Arm TechCon 2019: Arm Neoverse Steps Closer to the AI Edge

The convergence of Fifth Wave of Computing technologies – artificial intelligence (AI), 5G networks and the Internet of Things (IoT) – continues to fuel incredible change and drive new models of data consumption. Consider just the IoT: though we are still in the early days, we’ve already seen it evolve from a global network of tiny sensors to include higher-performance endpoints, from intelligent video sensors to autonomous machines.

As the IoT grows to fuel a global digital transformation, the data tsunami hurtling upstream to the cloud is causing cracks in a network infrastructure long optimized for downstream distribution. This is driving an urgent need for greater compute distribution throughout the global internet infrastructure, and growing demand for Arm Neoverse compute solutions. The ecosystem has certainly responded to this challenge, and I’m very pleased with the progress we’ve made together over the last year to turn our original vision for what Neoverse could achieve into what it is fast becoming.

AI spreads from the cloud and “Zeus” is waiting

While looking back can be good, I’d like to take a moment to look forward, at how AI needs to become much less centralized.

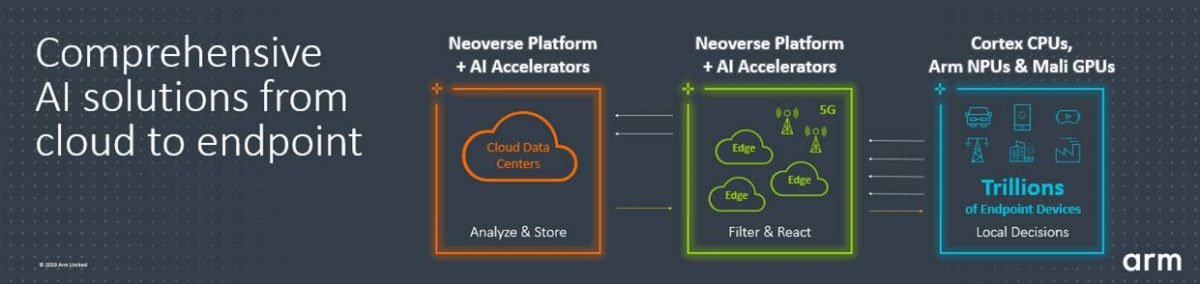

Today, most AI heavy lifting is done in the cloud due to the concentration of large data sets and dedicated compute, especially when it comes to the training of machine learning (ML) models. But when it comes to applying those models in real-world inferencing near the point where a decision is needed, a cloud-centric AI model struggles. Data travelling thousands of miles to a datacenter for model comparison can incur significant latency, so there’s no guarantee a decision will still be useful by the time it’s returned. When time is of the essence, it makes sense to distribute the intelligence from the cloud to the edge.
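To put a rough number on that latency, here is a back-of-the-envelope sketch in Python. It counts only propagation delay and assumes light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum). The distances and the function name `round_trip_propagation_ms` are illustrative assumptions, not figures from Arm. Real round trips also include routing, queuing and processing time, so these are lower bounds.

```python
# Assumption: signal speed in optical fiber is roughly 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Lower bound on round-trip time in milliseconds, propagation delay only."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    # Out and back, converted from seconds to milliseconds.
    return 2.0 * distance_km * 1000.0 / FIBER_SPEED_KM_PER_S


if __name__ == "__main__":
    # Hypothetical distances: a distant datacenter versus a nearby edge node.
    for label, km in [("cloud datacenter", 3000.0), ("edge node", 10.0)]:
        print(f"{label:>16}: {round_trip_propagation_ms(km):.2f} ms minimum")
```

Under these assumptions, the distant datacenter costs tens of milliseconds before any computation starts, while the nearby edge node costs a fraction of a millisecond.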

It goes without saying that Arm and our ecosystem partners are well-positioned to enable AI across all tiers of the internet thanks to the range of solutions on the market – from Arm Neoverse platforms and AI acceleration technologies for cloud and infrastructure, to AI-enabled Arm CPUs, NPUs, and GPUs catering to any endpoint need.

But we can always do more, which is why we recently announced adding the bfloat16 data format to the next revision of the Armv8-A architecture. This important enhancement significantly improves training and inferencing performance in Arm-based CPUs, and since we like to move fast here at Arm, we’re adding bfloat16 support into our Neoverse “Zeus” platform due out next year!
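For readers unfamiliar with bfloat16, the format keeps the sign bit and all 8 exponent bits of an IEEE 754 single-precision float but only the top 7 mantissa bits, so it matches the upper 16 bits of a float32. The Python sketch below illustrates the format by simple truncation. It is a software illustration only, not a description of how Arm hardware implements the conversion (hardware typically rounds rather than truncates), and the function names are hypothetical.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return a 16-bit bfloat16 pattern by truncating x's float32 encoding."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Keep sign (1 bit), exponent (8 bits) and the top 7 mantissa bits.
    return bits32 >> 16


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float via float32."""
    (value,) = struct.unpack(">f", struct.pack(">I", (bits16 & 0xFFFF) << 16))
    return value


def to_bfloat16(x: float) -> float:
    """Round-trip x through bfloat16 (truncating) to show the precision loss."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))


if __name__ == "__main__":
    for x in (1.0, 3.14159, 1e38):
        print(f"{x!r} -> 0x{float32_to_bfloat16_bits(x):04X} -> {to_bfloat16(x)!r}")
```

The trade-off is visible in the round trip: very large values such as 1e38 stay representable because the exponent range matches float32, but only about two to three significant decimal digits survive. ML workloads often tolerate that loss in exchange for halving memory traffic.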

Expanding compute at the edge

As demand for decision making moves to the edge, AI will play a dual role. In addition to making timely decisions based on the information contained within the data, when massive amounts of data need to be directed to the right location, AI needs to help with everything from traffic management to packet inspection. This is both a training and inferencing problem, and conventional compute systems just can’t keep up. What has traditionally been a network bridge at the edge of the internet is fast becoming an intelligent computing platform, ultimately leading to the emergence of what we’re calling the AI Edge and a $30 billion compute silicon TAM by 2025.

Performing AI at the edge has many benefits: it significantly reduces backhaul to the cloud, cuts latency, and improves reliability, efficiency, and security. In a world where models may need to evolve in real time as insights from global device deployments arrive quickly, these benefits are not just a nice-to-have.

Enabling the AI Edge: Project Cassini

Key to the successful deployment of applications exploiting the AI Edge will be providing diverse solutions covering a broad spectrum of power and performance requirements. One vendor’s solutions will not fit all needs. In addition to being AI-centric, the AI Edge needs to be cloud-native, virtualized (VMs or containers), and support multitenancy. Most importantly, it needs to be secure.

The current solutions that form the infrastructure edge come from an incredibly diverse ecosystem that is transitioning fast to meet these new demands. To help address this AI Edge transition, Arm is announcing Project Cassini, an industry initiative focused on ensuring a cloud-native experience across a diverse and secure edge ecosystem.

Together with our ecosystem partners and targeted at the infrastructure edge, Project Cassini will develop platform standards and reference systems upon which a cloud-native software stack can be deployed seamlessly within a standardized Platform Security Architecture (PSA) framework, now extended to the infrastructure edge. One area I would like to specifically highlight within Project Cassini is the work Arm and our ecosystem partners are doing on security. Two years ago, Arm announced PSA to enable companies to design security features against a common set of requirements that reduce the cost, time, and risk associated with building product-level IoT security. Today, Project Cassini builds on that momentum by extending PSA to the infrastructure edge with the aim of standardizing all fundamental security needs.

An evolving challenge

The world of one trillion IoT devices we anticipate by 2035 delivers infrastructural and architectural challenges on a new scale. As I’ve pointed out, our technology must keep evolving to cope. On the edge computing side, it means Arm will continue to invest heavily in developing the hardware, software, and tools to enable intelligent decision-making at every point in the infrastructure stack. It also means using heterogeneous compute at the processor level and throughout the network – from cloud to edge to endpoint device.

Find out more about how Arm Neoverse E1 and Arm Neoverse N1 processors deliver world-class performance, security, and scalability for next-generation infrastructure.

Any re-use permitted for informational and non-commercial or personal use only.