Understanding the intricacies of software timing behaviour is crucial, especially in safety-critical systems and systems with real-time requirements. Analysing timing on a single-core processor architecture might seem straightforward, but the landscape becomes notably more complex with multiple cores. There, contention for shared resources such as caches, buses, and peripherals adds layers of uncertainty to the timing analysis. In this blog post, we describe the on-chip bus architecture of the GR765 octa-core LEON/RISC-V microprocessor. This infrastructure is designed to improve system performance, minimize multi-core interference, and simplify worst-case execution time analysis.

Interference Challenges

The architecture of many modern multi-core microprocessors poses some key challenges:

- Bandwidth: Cores competing for simultaneous access to shared resources can create bottlenecks, leading to delays or inefficient resource utilization.

- Quality of Service (QoS) Management: Ensuring fair and predictable bus access for software instances with varying criticality levels is challenging.

These constraints are particularly critical where exceeding execution-time thresholds could trigger failures. Software developers must therefore account for these limitations to ensure that their applications behave as intended, and accommodating them often entails longer development cycles and substantial performance trade-offs.

The GR765 Striped Bus Architecture

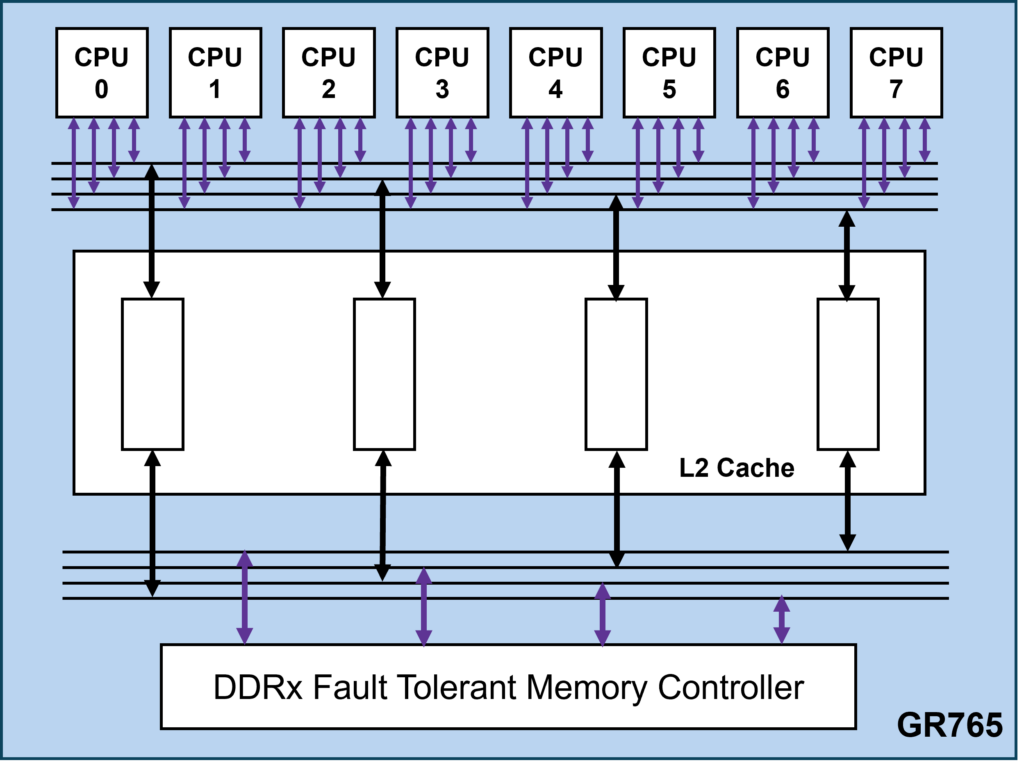

In response to these challenges, the GR765 introduces a striped interconnect that runs from the processor cores through the Level-2 (L2) cache to the SDRAM memory controller.

The striped interconnect consists of four parallel buses, each representing a stripe. Every processor core is connected to all four stripes, and each stripe is connected to its own L2 cache pipeline. Likewise, each L2 cache pipeline has its own stripe path to the SDRAM memory controller.

By default, stripes are interleaved at L1 cache-line granularity: cache line 0 maps to stripe 0, line 1 to stripe 1, and so on, wrapping around after stripe 3. Because consecutive lines are served by different buses and L2 cache pipelines, this mapping increases the total bandwidth available to the memory subsystem and improves average processing performance.
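The default interleaving can be expressed as a one-line address computation. The Python model below is a minimal sketch, not GR765 code. The four stripes come from the text, but the 32-byte line size and the helper name `stripe_for_address` are assumptions chosen for illustration.

```python
# Minimal sketch of the default cache-line interleaving across stripes.
# ASSUMPTION: 4 stripes (from the text) and a 32-byte cache line
# (placeholder; use the line size of the actual configuration).
NUM_STRIPES = 4
LINE_SIZE = 32  # bytes, assumed


def stripe_for_address(addr: int, line_size: int = LINE_SIZE,
                       num_stripes: int = NUM_STRIPES) -> int:
    """Return the stripe serving the cache line that contains `addr`."""
    line_index = addr // line_size      # which cache line the byte is in
    return line_index % num_stripes     # round-robin over the stripes


if __name__ == "__main__":
    # A sequential sweep over eight lines visits every stripe twice, so
    # back-to-back line fills can proceed on different buses in parallel.
    for line in range(8):
        addr = line * LINE_SIZE
        print(f"line {line} at 0x{addr:04x} -> stripe {stripe_for_address(addr)}")
```

When the line size and stripe count are both powers of two, this selection reduces to extracting the two address bits just above the line offset (bits 5 and 6 for 32-byte lines). That makes this kind of interleaving cheap to implement in hardware.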

Minimizing Multi-Core Interference

The striped buses can also be configured to map each stripe to a distinct memory area. By aligning the linking of the multi-core software with this stripe mapping, the bus transactions of a particular core can complete without being affected by concurrent traffic from other cores. This ensures that critical operations proceed with minimal interference.
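Aligning the link layout with the stripe map is a property that can be checked at build time. The sketch below is a hypothetical check of that kind and does not configure the GR765. The region table, addresses, core names, and helpers (`Region`, `stripes_used`, `is_isolated`) are all illustrative assumptions. The check reports whether any other core touches a stripe used by a core marked as critical.

```python
# Sketch: check that a multi-core link layout respects a stripe-per-region map.
# All addresses, sizes, and core names below are hypothetical placeholders,
# not GR765 register values or a real linker script.
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    base: int
    size: int
    stripe: int

    def overlaps(self, start: int, size: int) -> bool:
        """True if [start, start + size) intersects this region."""
        return start < self.base + self.size and self.base < start + size


# Hypothetical region-to-stripe configuration: one region per stripe.
REGIONS = [
    Region(0x0000_0000, 0x0100_0000, 0),
    Region(0x0100_0000, 0x0100_0000, 1),
    Region(0x0200_0000, 0x0100_0000, 2),
    Region(0x0300_0000, 0x0500_0000, 3),
]


def stripes_used(sections):
    """Set of stripes touched by a list of (start, size) linked sections."""
    used = set()
    for start, size in sections:
        hits = {r.stripe for r in REGIONS if r.overlaps(start, size)}
        if not hits:
            raise ValueError(f"section at 0x{start:08x} is outside all regions")
        used |= hits
    return used


def is_isolated(layout, critical):
    """True if no other core touches a stripe used by a critical core."""
    usage = {core: stripes_used(secs) for core, secs in layout.items()}
    for core in critical:
        others = set().union(*(s for c, s in usage.items() if c != core))
        if usage[core] & others:
            return False
    return True


# Hypothetical layout: (start, size) of each core's code and data.
LAYOUT = {
    "cpu0": [(0x0000_0000, 0x0008_0000)],
    "cpu1": [(0x0100_0000, 0x0008_0000)],
    "cpu2": [(0x0200_0000, 0x0010_0000)],
    "cpu3": [(0x0300_0000, 0x0010_0000)],
}

if __name__ == "__main__":
    print(is_isolated(LAYOUT, critical=["cpu0", "cpu1"]))
```

A section that straddles a region boundary counts against both stripes. A check like this therefore also catches buffers that the linker places across a stripe boundary by accident.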

As an example, consider a mixed-criticality platform, where critical partitions responsible for essential operations run on the same computer as non-critical partitions that perform payload computation tasks. In such a scenario, CPU0 and CPU1 may be responsible for the critical application while the other cores are assigned to payload processing.

In this setup, an effective strategy may be to dedicate one stripe exclusively to the memory allocated to CPU0 and another to the memory allocated to CPU1. This prevents interference from other cores on L2 cache hits, and reduces the interference channels towards the SDRAM to contention on the SDRAM data bus.

Note also that an SDRAM access involves several steps, including opening a row, reading or writing data, and closing the row when done. As the GR765 SDRAM memory controller can overlap operations such as row opening and closing, interference at the SDRAM data bus level affects only a fraction of the total SDRAM access time.
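Overlapping row operations shrinks the interference window. A back-of-the-envelope model shows how much, by comparing a naive bound, where every competing access is fully serialized, with a bound where only the data bursts serialize. This is a simplified sketch under stated assumptions. The cycle counts are placeholders rather than GR765 or datasheet values, competing accesses are assumed to hit different banks, and refresh and bank conflicts are ignored.

```python
# Simplified model of the interference one SDRAM access can suffer from
# concurrent accesses on the other stripes.
# ASSUMPTIONS: timing values are placeholders in controller clock cycles,
# competing accesses target different banks (so row open/close can overlap),
# and refresh and bank conflicts are ignored.
T_ACTIVATE = 15   # open a row
T_CAS = 15        # column access latency before data appears
T_BURST = 4       # data transfer occupying the shared data bus
T_PRECHARGE = 15  # close the row


def full_access_time() -> int:
    """Cycles for one access when nothing is overlapped."""
    return T_ACTIVATE + T_CAS + T_BURST + T_PRECHARGE


def worst_case_delay(competitors: int, overlapped: bool = True) -> int:
    """Extra cycles one access may wait behind `competitors` other stripes.

    With overlapping, only the competitors' data bursts serialize on the
    data bus; without it, each competitor's full access is in the way.
    """
    per_competitor = T_BURST if overlapped else full_access_time()
    return competitors * per_competitor


if __name__ == "__main__":
    # CPU0's stripe competing with the three other stripes.
    print("serialized full accesses:", worst_case_delay(3, overlapped=False))
    print("overlapped, data bus only:", worst_case_delay(3))
```

With these placeholder numbers, the bound drops from 147 to 12 cycles. The exact ratio depends entirely on the real timing parameters, but the structure of the argument is the same: only the burst portion of each competing access remains on the critical path.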

In summary, configuring the interconnect with mapped stripes and aligning the software design with this configuration makes the overall system more predictable and better isolated. This greatly simplifies worst-case execution time (WCET) analysis and gives a clearer picture of the system's behaviour under worst-case conditions.

To mitigate the remaining interference on the SDRAM data bus as well, a time-slotted mode is planned. This mode restricts accesses to each memory bank to 1/4 of the time, ensuring virtually zero interference between the designated stripes.
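A time-slotted scheme makes the waiting time depend only on the schedule, not on other cores' traffic. The sketch below models a generic round-robin time-division schedule. It assumes each of four stripes owns one of four equal, rotating slots and that a transfer may only start at the beginning of its own slot. The slot length and helper names are hypothetical, since the post does not describe the parameters of the planned mode.

```python
# Sketch of a time-slotted (TDM) data-bus schedule for four stripes.
# ASSUMPTIONS: equal, fixed-length slots rotating round-robin, and a
# transfer may start only at the beginning of its own stripe's slot.
# SLOT is a placeholder; the planned GR765 mode's parameters are not given.
NUM_STRIPES = 4
SLOT = 8  # cycles per slot, hypothetical
PERIOD = NUM_STRIPES * SLOT


def slot_owner(t: int) -> int:
    """Stripe that owns the data bus at cycle t."""
    return (t // SLOT) % NUM_STRIPES


def wait_cycles(t: int, stripe: int) -> int:
    """Cycles from t until `stripe` may next start a transfer."""
    return (stripe * SLOT - t) % PERIOD


def worst_case_wait() -> int:
    """Bound that holds regardless of traffic on the other stripes."""
    return PERIOD - 1


if __name__ == "__main__":
    # A request from stripe 0 arriving one cycle into its own slot must
    # wait for the next rotation.
    print(wait_cycles(1, 0), worst_case_wait())
```

The bound depends only on the slot length and the number of stripes, which is what makes it attractive for WCET analysis. The cost is that a slot goes unused whenever its owner has nothing to transfer.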

Conclusion

The striped interconnect provides higher memory bandwidth, allowing for improved processor performance. It can also be configured to provide isolated paths between the processor cores and external memory, largely eliminating multi-core timing interference. This feature is part of the GR765 octa-core LEON/RISC-V radiation-hardened microprocessor, and it is also available in the GRLIB IP library.