By Charles Qi, Cadence

With the growing popularity of applications such as mobile gaming and voice triggering, the audio/voice subsystem is playing a prominent role in many mobile system-on-chip (SoC) designs. The subsystem must be designed to meet dual demands: high-performance, high-resolution audio stream processing as well as always-on, low-power voice trigger and recognition. Customizable digital signal processing (DSP) and audio/voice subsystem solution intellectual property (IP) blocks can provide a cost-effective and efficient way to develop and deliver high-performance audio/voice products.

Over the last decade, the emergence of smartphones and tablets has driven considerable technology innovation. High-performance audio and video applications are the most essential for bringing personalization and consumer appeal to these devices. Emerging applications such as mobile gaming and voice trigger and recognition are pushing the requirements of audio/voice subsystem performance to two extreme ends of the spectrum. On the one hand, there's the growing demand for high-performance, high-resolution multi-channel audio stream processing. On the other hand, there's the demand for always-on voice trigger and speech recognition intelligence at extremely low power. The audio/voice subsystem design must deploy advanced digital signal processing (DSP) technology and a well-architected system solution to meet the growing demands.

Mobile audio/voice subsystem overview

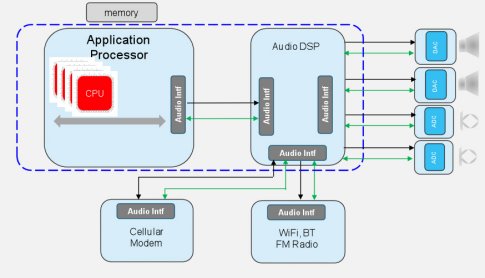

Figure 1 shows a representative mobile audio subsystem. The subsystem is centered on an audio DSP core that handles the main audio data processing, including stream encoding/decoding to different compression standards, sample rate conversion, pre- and post-processing, noise suppression, voice trigger/speech recognition, etc. The audio DSP core may or may not be integrated into the application processor system on chip (SoC). If it is integrated, the DSP core will be an offload processor sitting on the SoC bus hierarchy with access to the SoC's main memory system. If the DSP is not integrated, there is a dedicated bus interface to connect the application processor SoC to the stand-alone DSP. Audio peripherals such as the MICs and speakers are attached to the DSP core via a shared or point-to-point digital bus interface. The audio peripheral ICs contain the analog components, such as digital-to-analog converter (DAC)/audio-to-digital converter (ADC), analog filters, and amplifiers. In addition to connecting the audio peripherals, there may be audio interfaces to connect to the cellular baseband or WiFi/BT/FM radio combo devices to support voice calls (in smartphones) and Bluetooth audio or audio stream from FM radio.

Figure 1: Representative mobile audio/voice subsystem.

High performance, low-power audio data processing

The demand for high-performance DSP is driven by both voice and audio processing needs. On the voice side, the deployment of wideband (AMR-WB) and super wideband voice codecs for the support of high-performance voice over IP (VoIP) is coupled with the increasing requirements of noise suppression and noise-dependent volume control pre-processing. Such requirements are raising the audio DSP processing complexity by a factor of 2X to 4X. On the audio side, the codec complexity peaked with the introduction of multi-channel lossless versions, such as:

- Dolby TrueHD, MS10, MS11

- DTS Master Audio, M6, M8

There is, however, significant innovation in post-processing as shown in Table 1.

Table 1: Audio post-processing

The demand for high performance is driving audio DSPs to have more parallel, higher precision multiply-accumulation units (MACs).

While the performance demand is increasing, the demand for a lower power profile is also increasing from two perspectives:

- For high-end audio applications like mobile gaming or professional-grade audio playback, the system power profile cannot keep increasing linearly with the data-processing performance due to battery life restrictions of mobile devices

- In new applications such as voice trigger or speech recognition, the audio system needs to be always-on

Both perspectives require the DSP architecture to be extremely scalable and efficient. DSP architectures with scalable instruction set extensions, configurable memory/I/O partitions, and advanced power management features are the best to address both the high-performance and the low-power demands.

Low-power audio transport

In order to reduce the power profile and support low-power applications like voice trigger, the efficiency of audio data transport also needs to be considered in addition to the power efficiency of the audio DSP. From the audio subsystem topology, there are two optimization points to reduce the power of audio transport.

The first optimization point is to change the audio data transport model from a system memory based model to a DSP-tunneled model. With the system memory based model, the audio data pre- and post-DSP processing is placed into system memory across the SoC bus hierarchy. This model requires the system memory and SoC bus hierarchy to be always powered during the audio data processing. In this model, audio data also traverses the SoC bus hierarchy multiple times. Data access through the high-frequency SoC bus hierarchy and system memory consumes significant power and prevents efficient support of always-on applications. In the DSP tunneled model, the audio data processing and transmit/receive through the audio interface are localized to the DSP processor with dedicated local memory and highly efficient FIFO-style interfaces. The local system is clocked at much lower frequency and the power consumption of the subsystem is significantly reduced.

The second optimization point is to utilize new audio interface standards that are designed to support multiple audio peripheral devices with low I/O pin count and power efficiency. Recently, the MIPI Alliance has established two new audio interface standards, SLIMbus and SoundWire, to optimize audio subsystem connectivity. The SLIMbus standard targets the connectivity between the application processor and the standalone DSP codec. The SoundWire standard targets many audio devices, including DSP codec and audio peripheral devices. The SoundWire bus can be scaled up to support multiple data lanes to transport wide PCM audio samples between the application processor and the DSP codec. But it can also be optimized to support the transport of narrow PDM samples to MICs and speakers on a single data lane. The SoundWire standard defines a modified NRZI data encoding and double data rate for data transmission to minimize active driving and switching of the bus wire load. In addition, the standard contains a well-defined clock rate changing scheme and clock stop protocol to further minimize power consumption in always-on applications. The audio subsystem optimized by the SoundWire standard is shown in Figure 2.

Figure 2: SoundWire-based audio subsystem optimization

Building high-performance, low-power audio subsystems

High-performance, low-power audio subsystems have become essential elements of mobile and consumer devices. Emerging new applications, such as voice trigger and recognition and mobile gaming increase audio processing complexity even further. Audio subsystem design needs to embrace always-on features at very low power and deliver extremely high-quality audio effects for multiple audio channels.

Charles Qi is a System Solutions Architect in Cadence's IP Group. Prior to joining Cadence, Charles was VP of Engineering at Xingtera, an early-stage semiconductor start-up that specializes in the broadband-connected home based on PLC technology. Before joining Xingtera, he worked in Broadcom's Infrastructure and Networking Group as a security and SoC architect, with a focus on infrastructure SoC architecture.