By Aparna Tathod, Softnautics a MosChip Company

Containers have become a leading CI/CD deployment technique. Through integration with source code management (SCM) systems such as Git, Jenkins can start a build each time a developer commits code. Each build generates new Docker container images that are then available to all environments, which lets teams develop, share, and deploy applications more quickly. The global CI/CD tool market is expected to see a significant compound annual growth rate (CAGR) of 57.38% from 2023 to 2029, according to the report published by market intelligence data research.

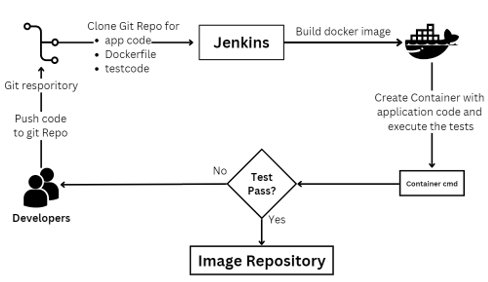

Docker containers help developers create and run tests for their code in any environment, so flaws are found early in an application's life cycle. This speeds up the process, reduces build time, and enables engineers to run tests concurrently. Containers can also be integrated with tools such as Jenkins and with SCM platforms such as GitHub. Developers push their code to GitHub, test it with Jenkins, and then build an image from that code. The image can be pushed to a Docker registry so that every environment runs the same build, which addresses inconsistencies between environment types.

A common difficulty in QA automation is configuring Jenkins to execute automated tests inside Docker containers and retrieve the results. This article explores an approach for automating the testing procedure in CI/CD.

Continuous Integration (CI)

Continuous integration is a process in which each commit a developer makes to a code repository is automatically verified. In most cases, validating the code means building and testing it. The tests must complete quickly, because the developer needs prompt feedback on their changes. As a result, CI typically runs unit tests and/or integration tests that rely on mocks.

Continuous Delivery (CD)

Continuous Delivery is the practice of automatically releasing validated build artefacts.

Once the code has been built, integrated, and tested successfully, the build artefacts can be made available. A release must still undergo testing before it is deemed stable, so release acceptance tests are run. Acceptance testing can be manual or automated.

There are several considerations to keep in mind:

- Depending on the application, a MySQL database may be required

- A way to determine whether an automated test run passed or failed is required. Further statistics may also be needed.

- There must be a complete shutdown and removal of all containers built during a test run
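The second consideration above, deciding pass or fail and collecting further statistics, could be handled by parsing the report that the test container writes. The following is a minimal sketch, assuming the test runner emits a JUnit-style XML report with flat (non-nested) `testsuite` elements; the function name and the sample report are hypothetical and not part of the original setup.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Aggregate test counts from a JUnit-style XML report (assumed format)."""
    root = ET.fromstring(xml_text)
    totals = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    # The root may be <testsuites> or a single <testsuite>;
    # iter() also yields the root itself when its tag matches.
    for suite in root.iter("testsuite"):
        for key in ("tests", "failures", "errors", "skipped"):
            totals[key] += int(suite.get(key, 0))
    # The run counts as a success only when nothing failed or errored.
    totals["passed"] = totals["failures"] == 0 and totals["errors"] == 0
    return totals


# Hypothetical report used only for illustration.
SAMPLE_REPORT = """
<testsuites>
  <testsuite name="api" tests="3" failures="1" errors="0" skipped="0"/>
  <testsuite name="ui" tests="2" failures="0" errors="0" skipped="1"/>
</testsuites>
"""

if __name__ == "__main__":
    print(summarize_junit(SAMPLE_REPORT))
```

A Jenkins build step could exit with a non-zero status when `passed` is false, which marks the build as failed.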

Why use containers?

Containers make it easy to deploy code that would otherwise need multiple servers without purchasing additional hardware: instead of buying two servers, you can deploy both workloads as containers on a single server. This reduces costs and makes scaling easier. For example, if an application needs only 1GB of RAM but the server has 32GB, the remaining 31GB is wasted. Hardware virtualization was developed to prevent this kind of waste.

However, there is still another source of waste: the code does not always use all of the RAM or CPU allocated to it. More than 90% of the time, only about 30% of the system's resources are in use. Containerization was introduced to help with this as well, since containers share hardware resources and make use of capacity that would otherwise sit idle.

Jenkins automates the CI/CD process, but the question remains of where continuous delivery should take place. The delivery target can be a container, a server, or a virtual machine.

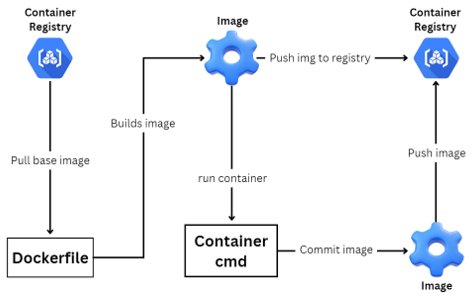

Understanding the Container lifecycle

The container lifecycle starts in the container registry, which holds the base images. The first step is to select an appropriate base image from the registry. The user then writes a Dockerfile that builds a new application image on top of the base image, adding the required packages and application dependencies as layers. The user also needs an account on a container registry, or a registry of their own, to store the built images.

A new image is built from the Dockerfile and pushed to the container registry. A container is then run from the newly built image to test the application. Once the task is complete, the container is stopped and deleted.

If the application is modified inside the running container and those modifications need to be kept, a new image must be created from the running container. This is done with the commit command, and the resulting image is pushed to the container registry for further processing.
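The lifecycle described above maps onto a short sequence of Docker CLI commands. The sketch below only assembles those command lines so the order is easy to see; the image tags, container name, and function names are hypothetical, and actually running the commands (for example with `subprocess.run`) is left to the caller.

```python
import shlex
from typing import List, Optional


def build_cmd(tag: str, context: str = ".", dockerfile: str = "Dockerfile") -> List[str]:
    # Build a new image from the Dockerfile on top of the chosen base image.
    return ["docker", "build", "-t", tag, "-f", dockerfile, context]


def push_cmd(tag: str) -> List[str]:
    # Store the image in the container registry.
    return ["docker", "push", tag]


def run_cmd(tag: str, name: str) -> List[str]:
    # Start a detached, named container from the image.
    return ["docker", "run", "-d", "--name", name, tag]


def commit_cmd(name: str, new_tag: str) -> List[str]:
    # Capture changes made inside the running container as a new image.
    return ["docker", "commit", name, new_tag]


def stop_cmd(name: str) -> List[str]:
    return ["docker", "stop", name]


def remove_cmd(name: str) -> List[str]:
    return ["docker", "rm", name]


def lifecycle(tag: str, name: str, snapshot_tag: Optional[str] = None) -> List[List[str]]:
    """Return the lifecycle steps in order; the commit step is optional."""
    steps = [build_cmd(tag), push_cmd(tag), run_cmd(tag, name)]
    if snapshot_tag:
        steps += [commit_cmd(name, snapshot_tag), push_cmd(snapshot_tag)]
    steps += [stop_cmd(name), remove_cmd(name)]
    return steps


if __name__ == "__main__":
    # Hypothetical registry and names, for illustration only.
    for step in lifecycle("registry.example.com/app:1.0", "app-test"):
        print(shlex.join(step))
```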

Container lifecycle

How does the process work?

The initial step is to run a Docker container that executes the automated tests and produces the test results along with an overall code coverage report.

If your tests rely on another service, such as a database, being accessible, use the dockerize utility to wait for that service to start.
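To illustrate what waiting for a dependency involves, here is a minimal sketch of a TCP readiness check: it retries a connection until the service accepts it or a deadline passes. It is an illustration of the idea rather than dockerize's implementation, and the function name and default values are assumptions.

```python
import socket
import time


def wait_for_tcp(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once host:port accepts a TCP connection, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means the service is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Not reachable yet (refused or timed out): pause, then retry.
            time.sleep(interval)
    return False
```

A test entrypoint could call `wait_for_tcp("db", 3306)` before starting the suite, where `db` is a hypothetical service host name. With dockerize itself, the comparable invocation is along the lines of `dockerize -wait tcp://db:3306 -timeout 60s` followed by the test command.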

Integrating dependencies

An application can be made up of many containers that run various services (e.g., the application and its database). Starting and managing containers manually can be time-consuming, so Docker developed a tool to speed up the process: Docker Compose.
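A Compose definition for such a setup could look like the sketch below, which builds the structure in Python and prints it as JSON. Compose files are normally written in YAML, but since JSON is valid YAML, Compose also accepts a JSON file passed with `-f`. The service names, image names, database name, and password here are all hypothetical placeholders.

```python
import json


def compose_definition(app_image: str, test_image: str) -> dict:
    """Describe an application, its MySQL database, and a test runner."""
    return {
        "services": {
            "db": {
                "image": "mysql:8.0",
                # Placeholder credentials; use real secrets handling in practice.
                "environment": {
                    "MYSQL_ROOT_PASSWORD": "example",
                    "MYSQL_DATABASE": "appdb",
                },
            },
            # The application starts after the database container.
            "app": {"image": app_image, "depends_on": ["db"]},
            # The test container starts after the application container.
            "tests": {"image": test_image, "depends_on": ["app"]},
        }
    }


if __name__ == "__main__":
    definition = compose_definition("example/app:latest", "example/app-tests:latest")
    print(json.dumps(definition, indent=2))
```

Note that `depends_on` in this short form only controls start order; it does not wait for the database to be ready to accept connections, which is why a separate readiness check is still useful.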

Performing the automated tests

It’s time to create a Jenkins project! Use the “Freestyle project” type, then add a build step labelled “Execute shell”.

To reference containers accurately, specify a project name so that the test containers can be distinguished from other containers on the same host.
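One way to get a distinct project name per run is to derive it from the `JOB_NAME` and `BUILD_NUMBER` environment variables that Jenkins sets for each build. The sketch below assumes Docker Compose is used, that the test service is called `tests`, and that the Compose file is `docker-compose.yml`; those names and the helper functions are hypothetical.

```python
import os
import re
import shlex
import subprocess
from typing import List, Mapping


def project_name(env: Mapping[str, str]) -> str:
    """Build a Compose-safe project name from Jenkins build variables."""
    raw = f"{env.get('JOB_NAME', 'local')}-{env.get('BUILD_NUMBER', '0')}".lower()
    # Compose project names allow lowercase letters, digits, '-' and '_'.
    name = re.sub(r"[^a-z0-9_-]", "-", raw)
    # The name must start with a letter or digit.
    return name.lstrip("-_") or "local"


def up_cmd(project: str, compose_file: str = "docker-compose.yml",
           test_service: str = "tests") -> List[str]:
    # --exit-code-from makes the command return the test container's exit code.
    return ["docker", "compose", "-p", project, "-f", compose_file,
            "up", "--exit-code-from", test_service]


def down_cmd(project: str, compose_file: str = "docker-compose.yml") -> List[str]:
    # Stop and remove this project's containers, networks, and volumes.
    return ["docker", "compose", "-p", project, "-f", compose_file,
            "down", "--volumes", "--remove-orphans"]


def run_tests(project: str) -> int:
    """Run the suite and tear the project down even if the run fails."""
    try:
        return subprocess.call(up_cmd(project))
    finally:
        subprocess.call(down_cmd(project))


if __name__ == "__main__":
    # Print the commands rather than running them, so no Docker host is needed.
    name = project_name(os.environ)
    print(shlex.join(up_cmd(name)))
    print(shlex.join(down_cmd(name)))
```

Because every command carries the same `-p` value, teardown only touches containers that belong to this build, which leaves other containers on the host alone.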

We can monitor the log output of the container hosting the tests to determine when the tests have actually finished running.
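As a rough sketch of that monitoring step, the function below polls a log file until a completion marker appears or a timeout expires. It assumes the container's log has been written to a file the Jenkins job can read and that the test runner prints a known final line; the marker text, file name, and function name are hypothetical.

```python
import time
from pathlib import Path


def wait_for_marker(log_path: str, marker: str,
                    timeout: float = 600.0, poll: float = 1.0) -> bool:
    """Return True once `marker` appears in the log, False on timeout."""
    path = Path(log_path)
    deadline = time.monotonic() + timeout
    while True:
        # Re-read the whole file on each poll; fine for modest log sizes.
        if path.exists() and marker in path.read_text(errors="replace"):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll)
```

For example, `wait_for_marker("tests.log", "TESTS FINISHED")` would block until the hypothetical final line is written or ten minutes pass.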

Automated testing with containers in continuous delivery

The following are the benefits of using containers for automation and QA.

- Containerization improves SDLC effectiveness and speed, which benefits businesses

- Deployments carried out in containers are fast, because containers share the host's Linux kernel instead of booting a full operating system. This makes it possible to deploy the same container to several locations at once.

- Due to the isolation provided by each container, maintaining tests and environments is simpler. The other containers won't be impacted by a mistake in one of the containers.

- Containers provide a predictable and repeatable testing environment, and there is no requirement to pre-allocate RAM to them. They run directly on the host operating system of the machine that hosts the Docker image.

- Keeping most application dependencies and configuration inside a container reduces the number of environment-specific variables. Because continuous integration runs in parallel with testing, the application stays consistent between testing and production.

- Running new applications or automation suites in new containers is simple.

- If a problem arises, testers can send developers a container image rather than only a bug report (that is, an image of the application, possibly captured at the moment a test failed). Multiple developers can then debug the issue simultaneously, because any number of containers can be created from the same image.

- Defects and problematic changes are reported early

- Automating repetitive manual test case execution

- QA engineers can conduct more exploratory testing.

Conclusion:

The adoption of containerized testing and the integration of Jenkins into the CI/CD process have revolutionized QA automation testing. By leveraging containers, developers can create and execute tests in any environment, leading to early flaw detection and faster application development.

About the Author

Aparna Tathod is a Senior Automation Engineer at Softnautics a MosChip Company and has 6+ years' experience as a DevOps Engineer. She has worked on AWS cloud, Docker, Kubernetes, Prometheus, Grafana, Linux, Python, QA, and automation projects. She is passionate about finding the root cause of complex issues.