Autonomous vehicles rely on high-quality data annotation to train AI/ML models for safe and intelligent driving. This blog explores how sensor data from cameras, LiDAR, and radar is annotated using techniques like 3D cuboids, polygons, semantic segmentation, and splines to help vehicles understand their surroundings. It also explains how sensor fusion, model training, and feedback loops turn raw data into real-time decisions. With deep expertise in edge AI and automotive systems, MosChip accelerates the development of reliable ADAS and autonomous solutions.

Data annotation is the process of labelling data captured from various sources, such as images, video, text, and audio, so that it can be used to train AI/ML models. Common annotation tasks include image classification, object detection, semantic segmentation, and labelling of multimodal data. One of the most safety-critical applications of data annotation lies in mobility and transportation.

AI/ML models learn to make accurate predictions by training on correctly annotated data, especially in supervised learning, where annotations define the ground truth. Applications like self-driving cars and virtual assistants depend on this precision; errors in annotation can lead to serious misinterpretations and unsafe outcomes. Accurate, high-quality annotation is essential in safety-critical domains like image recognition and autonomous driving, where even minor mistakes can have major consequences.

Data annotation follows a structured life cycle: data collection, labelling, quality check, model training, and feedback. Raw data is labelled manually or automatically, then verified for accuracy. The model is trained on this data, and performance feedback is used to refine labels. This loop improves learning and reliability across use cases.
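The quality-check step is what routes bad labels back into the loop. Below is a minimal Python sketch of that step. It assumes 2D box labels and a hypothetical four-class taxonomy. The `BoxLabel` type and the `qc_issues` and `quality_check` helpers are illustrative names, not part of any particular annotation tool. Labels that fail a check go back for relabelling, which is the feedback path of the life cycle.

```python
from dataclasses import dataclass

# Hypothetical project taxonomy; real projects define their own class list
ALLOWED_CLASSES = {"car", "pedestrian", "cyclist", "traffic_sign"}

@dataclass
class BoxLabel:
    image_id: str
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

def qc_issues(box, width, height):
    """List the problems found in one label; an empty list means it passes."""
    issues = []
    if box.label not in ALLOWED_CLASSES:
        issues.append(f"unknown class '{box.label}'")
    if not (0 <= box.x_min < box.x_max <= width and 0 <= box.y_min < box.y_max <= height):
        issues.append("box is degenerate or outside the image")
    return issues

def quality_check(labels, width, height):
    """Split labels into accepted ones and ones sent back to annotators."""
    accepted, rework = [], []
    for box in labels:
        target = rework if qc_issues(box, width, height) else accepted
        target.append(box)
    return accepted, rework

labels = [
    BoxLabel("f001", "car", 100, 200, 300, 350),
    BoxLabel("f001", "pedestrain", 400, 180, 440, 300),     # misspelled class name
    BoxLabel("f001", "traffic_sign", 1900, 50, 1990, 120),  # extends past 1920 px
]
accepted, rework = quality_check(labels, width=1920, height=1080)
print(len(accepted), len(rework))  # 1 2
```

Production QC usually adds further checks, such as reviewer agreement or consistency across frames. This sketch only shows the accept-or-rework split.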

The Role of Data Annotation in Autonomous Vehicles

In the automotive domain, Advanced Driver Assistance Systems (ADAS) and automated driving systems span multiple levels of autonomy, from basic features like lane-keeping and adaptive cruise control up to fully autonomous driving.

These systems depend heavily on data annotation to train AI/ML models that enable real-time perception and decision-making. Raw data is collected from a suite of sensors, including LiDAR, radar, cameras, and ultrasonic sensors, along with signals from vehicle control actuators.

To develop accurate and reliable AI models, this raw sensor data must be carefully annotated to reflect real-world conditions. Each input, whether from a camera, LiDAR, or radar, requires ground truth labelling that tells the system exactly what it is seeing, such as object type, position, or movement.

Sensor fusion combines these inputs into a multidimensional view of the environment, but AI models must be trained to interpret it accurately. That requires annotated datasets in which each modality is labelled with ground truth such as object type, position, and speed. Camera images use bounding boxes for vehicles, pedestrians, and traffic signs. LiDAR point clouds are marked with 3D cuboids for spatial awareness. Radar data is annotated with velocity vectors or object IDs to track motion over time.
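The sketch below shows roughly what per-sensor ground truth can look like once it is organised for fusion. It defines one hypothetical record type per modality and groups them by a shared timestamp. All class and field names, units, and coordinate conventions here are assumptions. Public datasets and in-house tools each define their own schema.

```python
from dataclasses import dataclass, field

@dataclass
class CameraBox:
    """2D bounding box in image pixels."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

@dataclass
class LidarCuboid:
    """3D cuboid in the LiDAR frame: centre (m), size (m), yaw (rad)."""
    label: str
    center: tuple[float, float, float]
    size: tuple[float, float, float]  # length, width, height
    yaw: float

@dataclass
class RadarTarget:
    """Radar detection with a track ID and radial velocity (m/s)."""
    track_id: int
    range_m: float
    azimuth_rad: float
    radial_velocity: float

@dataclass
class AnnotatedFrame:
    """All ground-truth labels for one time-synchronised sensor snapshot."""
    timestamp_us: int
    camera: list[CameraBox] = field(default_factory=list)
    lidar: list[LidarCuboid] = field(default_factory=list)
    radar: list[RadarTarget] = field(default_factory=list)

# One pedestrian seen by all three sensors at the same instant (made-up values)
frame = AnnotatedFrame(
    timestamp_us=1_700_000_000_000,
    camera=[CameraBox("pedestrian", 812, 410, 860, 560)],
    lidar=[LidarCuboid("pedestrian", (14.2, -1.1, 0.9), (0.6, 0.7, 1.8), 1.57)],
    radar=[RadarTarget(track_id=7, range_m=14.3, azimuth_rad=-0.08, radial_velocity=-1.2)],
)
print(len(frame.camera), len(frame.lidar), len(frame.radar))  # 1 1 1
```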

The fusion of these annotated inputs enables the ADAS model to understand complex driving scenarios and make context-aware decisions. For instance, as a vehicle approaches an intersection, multiple sensors collect data: cameras capture images, LiDAR maps the 3D surroundings, and radar detects object movement and speed.

In the data annotation process, bounding boxes are drawn around key objects like pedestrians, vehicles, and traffic signs in camera images. These boxes are then labelled with names such as “stop sign,” “pedestrian,” or “car.” Similarly, in LiDAR point clouds, 3D boxes define the shape, position, and distance of surrounding objects. Radar data may be tagged with motion attributes such as velocity. These labelled datasets help the AI model learn to recognize and respond to similar situations in real time, supporting accurate perception, safe behaviour, and adherence to automotive safety standards.

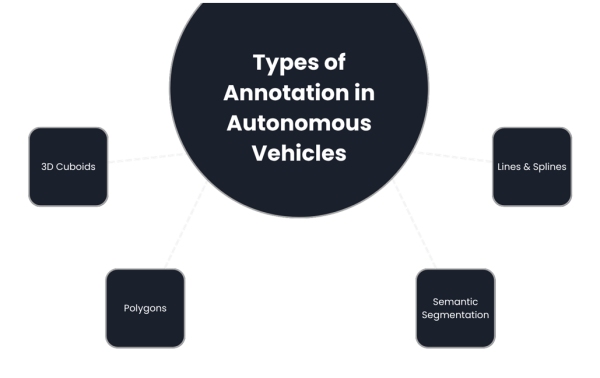

Types of Data Annotation in Autonomous Vehicles

1. 3D Cuboids

3D cuboid annotation is used primarily on LiDAR point cloud data and stereo camera feeds to capture the three-dimensional structure of objects such as vehicles, pedestrians, and obstacles. Annotators draw cube-like boxes around each object, labelling them with object type and spatial dimensions. This allows the perception model to learn not just object presence but also their depth, orientation, and volume in real-world coordinates. 3D cuboids are critical for collision avoidance and object tracking in ADAS and autonomous driving applications.
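The geometry behind a cuboid label fits in a few lines of Python. This sketch assumes a common but not universal convention: each box is stored as a centre, a length/width/height, and a yaw angle about the vertical z-axis in the LiDAR frame. `cuboid_corners` and `point_in_cuboid` are hypothetical helpers. The second one shows how a labelled cuboid selects the LiDAR points that belong to an object.

```python
import math

def cuboid_corners(cx, cy, cz, length, width, height, yaw):
    """Eight corners of a box centred at (cx, cy, cz), rotated by yaw about the z-axis."""
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the horizontal offset by yaw, then translate to the box centre
                corners.append((cx + dx * c - dy * s, cy + dx * s + dy * c, cz + dz))
    return corners

def point_in_cuboid(p, cx, cy, cz, length, width, height, yaw):
    """True if the LiDAR point p = (x, y, z) lies inside the box."""
    # Move the point into the box frame: undo the translation, then undo the yaw
    x, y, z = p[0] - cx, p[1] - cy, p[2] - cz
    c, s = math.cos(-yaw), math.sin(-yaw)
    local_x, local_y = x * c - y * s, x * s + y * c
    return abs(local_x) <= length / 2 and abs(local_y) <= width / 2 and abs(z) <= height / 2

# Hypothetical car-sized cuboid: centre (m), length/width/height (m), yaw 30 degrees
box = (10.0, 2.0, 0.8, 4.5, 1.9, 1.6, math.radians(30))
points = [(10.0, 2.0, 0.8), (11.5, 2.9, 0.5), (10.0, 4.0, 0.8), (10.0, 2.0, 2.0)]
inside = [p for p in points if point_in_cuboid(p, *box)]
print(len(cuboid_corners(*box)), len(inside))  # 8 2
```

The yaw term is what lets the box follow a vehicle's heading instead of the sensor axes. That orientation is the information a tracking or collision-avoidance model learns from.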

2. Polygons

Polygon annotation is a precise technique used to label complex and irregularly shaped objects by connecting multiple points around their exact contours. Unlike bounding boxes, which may miss fine details or include noise, polygons allow accurate labelling of objects like pedestrians, traffic signs, bicycles, and road boundaries.

This method is particularly valuable in autonomous vehicles, where it helps models detect edges, recognize small or overlapping objects, and understand cluttered scenes more accurately. It also enables annotators to mark contextual elements such as sidewalks, road curves, or obstructed areas. While polygon annotation improves model performance in vision-based systems, it is more time-consuming compared to simpler methods due to its detailed nature.
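Polygon labels are often converted to pixel masks before training. The sketch below does that conversion with the even-odd (ray casting) point-in-polygon test. It assumes vertices in pixel coordinates and samples each pixel at its centre. The function names are illustrative.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd (ray casting) test: is (x, y) inside the polygon?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) to the right cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def rasterize_polygon(polygon, width, height):
    """Turn a polygon annotation into a binary mask, sampling pixel centres."""
    return [[1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
             for col in range(width)]
            for row in range(height)]

# A small triangular region annotated on an 8 x 7 pixel image
triangle = [(1, 1), (7, 1), (4, 6)]
mask = rasterize_polygon(triangle, width=8, height=7)
for row in mask:
    print("".join("#" if v else "." for v in row))
```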


3. Semantic Segmentation

Semantic segmentation is a high-precision data annotation technique that labels every pixel in an image with a specific class, such as "road," "vehicle," "pedestrian," or "sidewalk." Unlike bounding boxes or polygons, it enables a complete and detailed understanding of the scene, which is vital for autonomous vehicles navigating real-world environments.

This pixel-level labelling helps in lane detection, drivable area recognition, and accurate object boundaries, especially in complex scenarios like intersections or traffic-dense areas. Annotators typically work with a predefined class list to ensure consistency across frames. Beyond automotive, semantic segmentation is also gaining traction in fields like medical imaging, where precise region classification is critical.
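Predictions from a segmentation model are commonly scored per class with intersection over union (IoU). This sketch assumes label maps stored as lists of integer class IDs, where each ID indexes a hypothetical predefined class list. The mean is taken only over classes that actually appear.

```python
import math

# Hypothetical predefined class list; index = class ID stored in each pixel
CLASSES = ["road", "sidewalk", "vehicle", "pedestrian"]

def per_class_iou(gt, pred, num_classes):
    """IoU per class for two equally sized label maps (lists of rows of class IDs)."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for gt_row, pred_row in zip(gt, pred):
        for g, p in zip(gt_row, pred_row):
            if g == p:
                inter[g] += 1  # correct pixel: counted once in intersection and union
                union[g] += 1
            else:
                union[g] += 1  # missed pixel of class g
                union[p] += 1  # wrongly predicted pixel of class p
    # NaN marks classes absent from both maps so they do not distort the mean
    return [inter[c] / union[c] if union[c] else float("nan") for c in range(num_classes)]

gt = [[0, 0, 1, 1],
      [0, 0, 1, 1],
      [2, 2, 0, 0]]
pred = [[0, 0, 1, 0],
        [0, 0, 1, 1],
        [2, 0, 0, 0]]
ious = per_class_iou(gt, pred, len(CLASSES))
present = [v for v in ious if not math.isnan(v)]
miou = sum(present) / len(present)
print(dict(zip(CLASSES, ious)))
print(f"mean IoU: {miou:.3f}")  # mean IoU: 0.667
```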

4. Lines and Splines

To ensure autonomous vehicles navigate safely and follow traffic rules, it's essential to train AI models not just on object recognition but also on road structure understanding. Line and spline annotations are used to mark lane boundaries, road edges, and path guidelines by drawing linear or curved paths that reflect the actual road layout.

These annotations allow the vehicle to maintain lane discipline, perform accurate lane-keeping, and plan safe, collision-free trajectories. This type of annotation is especially vital for functions like highway autopilot, adaptive cruise control, and path planning. By learning from these labelled lanes and edges, the system can adapt to complex traffic scenarios and road conditions with higher reliability.
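Spline annotations are often stored as a few clicked control points that are expanded into a smooth curve. This sketch assumes a uniform Catmull-Rom spline. That is one common choice among several: tools may instead use Bézier curves or plain polylines. Points are in metres in a hypothetical vehicle frame.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Point on a uniform Catmull-Rom segment between p1 and p2, for t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (c - a) * t + (2 * a - 5 * b + 4 * c - d) * t2 + (3 * b - a - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

def densify_lane(control_points, samples_per_segment=10):
    """Expand sparse clicked lane points into a dense, smooth polyline."""
    # Repeat the end points so the curve starts and ends exactly on the first/last clicks
    pts = [control_points[0], *control_points, control_points[-1]]
    dense = []
    for i in range(1, len(pts) - 2):
        for k in range(samples_per_segment):
            t = k / samples_per_segment
            dense.append(catmull_rom(pts[i - 1], pts[i], pts[i + 1], pts[i + 2], t))
    dense.append(tuple(float(v) for v in control_points[-1]))
    return dense

# Hypothetical lane-boundary clicks (x forward, y left, metres) on a gentle curve
clicks = [(0.0, 0.0), (10.0, 0.2), (20.0, 0.8), (30.0, 1.8)]
lane = densify_lane(clicks, samples_per_segment=5)
print(len(lane))  # 16
```

Catmull-Rom passes through every clicked point. Annotators therefore only need to place points on the painted line, and the curve between them stays smooth.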

How to Choose the Right Data Annotation Method for Autonomous Driving Applications

When building fully autonomous (Level 5) vehicles, choosing the right method to label, or "annotate", the data is a key step in training smart AI models. These vehicles rely on many sensors, such as cameras, LiDAR, and radar, to understand the world around them. Each sensor captures a different type of information, and each needs a matching annotation method to help the AI learn accurately.

For example, consider a car driving down a street. Its camera captures an image showing a pedestrian crossing the road. To help the AI recognize the pedestrian, we draw a rectangle (bounding box) around the person. Bounding boxes are quick and effective for identifying objects like cars, people, or bicycles, but they are not very precise around the edges. As the car continues, the same camera sees a stop sign, which has an octagonal shape.

Instead of drawing a rectangle around it (which would include a lot of background), we use a polygon annotation to trace the exact edges of the stop sign. This gives the AI a much more accurate understanding of the shape, which is especially helpful for identifying road signs and other irregularly shaped objects.
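To put a number on the background a rectangle includes, the sketch below compares two areas. The first is the area of a regular octagon, computed with the shoelace formula. The second is the area of its axis-aligned bounding box. The sign size and position are arbitrary assumptions. For any regular octagon with flat top and bottom edges, roughly 17% of the box is background.

```python
import math

def shoelace_area(polygon):
    """Area of a simple polygon from its ordered vertices (shoelace formula)."""
    n = len(polygon)
    s = 0.0
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2

def bbox_area(polygon):
    """Area of the tightest axis-aligned rectangle around the polygon."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))

# Regular octagon of circumradius 50 px centred at (100, 100), rotated 22.5 degrees
# so it has flat top and bottom edges like a stop sign
octagon = [
    (100 + 50 * math.cos(math.radians(22.5 + 45 * k)),
     100 + 50 * math.sin(math.radians(22.5 + 45 * k)))
    for k in range(8)
]
background = 1 - shoelace_area(octagon) / bbox_area(octagon)
print(f"{background:.1%} of the bounding box is background")  # 17.2% of the bounding box is background
```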

Meanwhile, the LiDAR sensor captures the depth and structure of the environment using 3D point clouds. To annotate these, we use 3D cuboids to show the position and size of other vehicles, cyclists, or obstacles in three-dimensional space. For mapping lane lines or road boundaries, lines and splines are drawn, helping the vehicle stay in its lane or plan paths.

If the goal is to capture every detail, such as separating the drivable road from sidewalks or barriers, semantic segmentation is used to label each pixel in the image. The annotation type is chosen based on labelling speed, the AI model's needs, and the real-world driving scenario (urban streets, highways, etc.), so that the vehicle understands its surroundings correctly.
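The selection logic described above can be summarised as a simple lookup. This is a hypothetical sketch. The keys and labels are illustrative, and real projects often apply several annotation types to the same frame.

```python
# Hypothetical rule table: (sensor, what the model must learn) -> annotation type
ANNOTATION_GUIDE = {
    ("camera", "object presence"): "2D bounding box",
    ("camera", "exact object shape"): "polygon",
    ("camera", "per-pixel scene layout"): "semantic segmentation",
    ("camera", "lane or road edge"): "lines / splines",
    ("lidar", "object position and size"): "3D cuboid",
    ("lidar", "lane or road edge"): "lines / splines",
    ("radar", "object motion"): "velocity vectors / object IDs",
}

def choose_annotation(sensor: str, goal: str) -> str:
    """Look up the annotation type for a sensor and learning goal."""
    key = (sensor.lower(), goal.lower())
    if key not in ANNOTATION_GUIDE:
        raise ValueError(f"no rule for sensor={sensor!r}, goal={goal!r}")
    return ANNOTATION_GUIDE[key]

print(choose_annotation("LiDAR", "object position and size"))  # 3D cuboid
```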

Model Training: Turning Annotated Data into Intelligent Driving Decisions

Once sensor data from LiDAR, radar, and cameras is accurately annotated, it enters AI/ML pipelines to train perception and planning models. These datasets are divided into training, validation, and test sets to ensure the model generalizes well. Input-label pairs teach the model to recognize patterns, such as identifying pedestrians or lane boundaries. Deep learning models like CNNs are used for image data, while 3D networks such as PointNet or VoxelNet handle LiDAR input.
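Here is a minimal sketch of the train/validation/test split. It assumes hypothetical drive-sequence IDs and a 70/15/15 ratio. The split is done by whole sequence rather than by individual frame, and that is a deliberate choice. Consecutive frames from one drive are nearly identical, so spreading them across splits would let test data leak into training.

```python
import random

def split_by_sequence(sequence_ids, train=0.70, val=0.15, seed=42):
    """Shuffle whole drive sequences with a fixed seed, then cut train/val/test."""
    ids = sorted(set(sequence_ids))   # dedupe and fix the order before shuffling
    random.Random(seed).shuffle(ids)  # local RNG: reproducible, no global state
    n_train = round(len(ids) * train)
    n_val = round(len(ids) * val)
    return {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],  # remainder goes to test
    }

# Hypothetical IDs: each one names a recorded drive containing many frames
sequences = [f"drive_{i:03d}" for i in range(20)]
splits = split_by_sequence(sequences)
print({name: len(ids) for name, ids in splits.items()})  # {'train': 14, 'val': 3, 'test': 3}
```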

During training, the model refines its predictions by comparing them against ground truth labels and adjusting weights accordingly. Performance is measured using metrics like precision, recall, F1-score, and intersection over union (IoU). If the model struggles with complex scenarios, like occlusions or curved roads, the feedback is used to refine annotations and improve future training. This iterative loop enhances model accuracy and robustness, making it a vital part of building safe and reliable autonomous vehicle systems.
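The metrics above can be sketched for 2D detections as follows. The sketch makes three assumptions: boxes are axis-aligned `(x_min, y_min, x_max, y_max)` tuples, the IoU threshold is 0.5, and matching is simple greedy one-to-one. Benchmark protocols such as mean average precision add confidence ranking and multiple thresholds on top of this.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def detection_metrics(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predictions to labels; returns precision, recall, F1."""
    unmatched_gt = list(ground_truth)
    tp = 0
    for pred in predictions:  # in practice, sort by confidence (highest first) beforehand
        best = max(unmatched_gt, key=lambda g: box_iou(pred, g), default=None)
        if best is not None and box_iou(pred, best) >= iou_threshold:
            unmatched_gt.remove(best)  # each label can be matched only once
            tp += 1
    fp = len(predictions) - tp
    fn = len(ground_truth) - tp
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

gt = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
preds = [(1, 1, 11, 11), (20, 20, 30, 30), (60, 60, 70, 70)]
p, r, f1 = detection_metrics(preds, gt)
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")  # precision=0.67 recall=0.67 F1=0.67
```

Here the slightly shifted box still matches (IoU of about 0.68). The box far from any label counts as a false positive, and the unmatched label counts as a false negative.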

As autonomous driving technologies evolve, the demand for high-precision, large-scale data annotation will continue to grow. Future annotation workflows will increasingly rely on AI-assisted labelling, active learning, and edge-cloud collaboration to accelerate dataset generation while maintaining accuracy.

Advancements in automated sensor fusion alignment, context-aware annotation tools, and real-time validation loops will further optimize training pipelines. With increasing regulatory focus on safety and reliability, robust annotation practices will remain central to developing explainable and ethically aligned AI systems, positioning data annotation not just as a preprocessing step but as a strategic foundation for the future of autonomous mobility.

MosChip brings strong AI/ML expertise through its DigitalSky™ AI platform, enabling real-time intelligence at the edge. In the automotive domain, MosChip offers advanced solutions for Functional Safety (FuSa), ADAS (Advanced Driver Assistance Systems), and sensor fusion, supporting OEMs and Tier-1s in building smarter, safer vehicles. MosChip delivers platforms optimized for AI-driven perception, decision-making, and in-vehicle computing. These capabilities help accelerate next-gen autonomous and semi-autonomous vehicle development in line with industry standards such as ISO 26262.

To know more about MosChip’s capabilities, drop us a line and our team will get back to you.