1. Intro

In today’s connected world, data is a crucial asset in SoCs. Part V of our series explores how to protect and encrypt that data, whether at rest, in transit, or in use. It builds on the earlier posts in the series: essential security features for digital designers, key management, secure boot, and runtime integrity.

Why devote an entire segment to data encryption? Because even a securely booted system is vulnerable if sensitive data remains in plaintext. Modern SoCs process critical assets such as personal data, keys, and AI models that must be shielded from attackers, which makes robust encryption essential. Data protection is both a security necessity and an engineering challenge, and it must be addressed early in the design without compromising performance or efficiency. Encryption also ties into a broader security strategy: secure boot ensures only trusted code runs, runtime integrity defends against runtime attacks, and key management supplies the secrets that encryption needs. Together, these layers form a defense-in-depth approach that keeps data safe even if one of them is compromised. Ultimately, robust encryption is not just about avoiding hypothetical hacks; it protects your users and your brand while enabling secure, high-performance system design.

In this article, we share practical guidance on implementing data protection and encryption across different data states and map each state to SoC architecture features. We also cover design trade-offs and highlight common pitfalls to help engineers integrate encryption effectively and avoid costly mistakes.

2. Encryption Across Different Data States

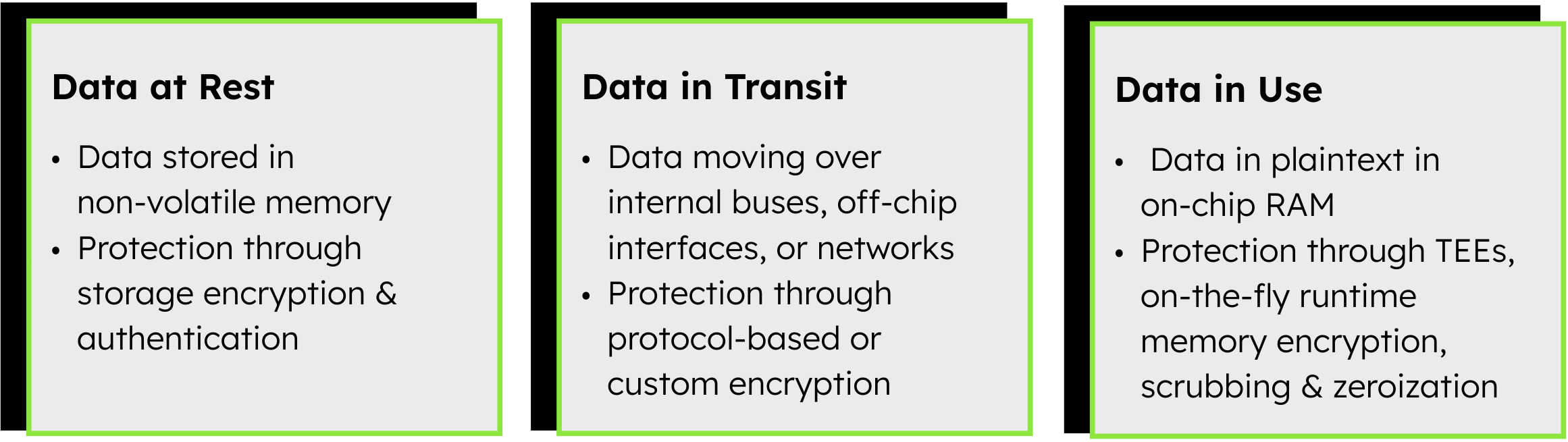

Data in an SoC can be in one of three broad states at any given time: at rest, in transit, or in use. A comprehensive SoC security architecture should address all three. This means encrypting stored data, securing data as it moves through the system or off-chip, and even protecting data while it’s being processed. Let’s break down each of these and see how they map to SoC design features.

2.1 Data at Rest: Securing Stored Data

Data at rest refers to information stored in non-volatile memory. Encrypting data at rest ensures that if an attacker physically extracts the storage or dumps memory contents, they cannot retrieve useful information.

A common way to protect data at rest is storage encryption using symmetric ciphers like AES. In secure SoCs, dedicated hardware in the memory controller transparently encrypts data as it’s written to external memory and decrypts it on read, so the CPU and software operate as usual. This approach, known as on-the-fly (or inline) encryption, simplifies software development, reduces the chance of integration errors, and ensures that all off-chip data remains encrypted.
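A small software model can make the on-the-fly idea concrete. The sketch below is a simplified stand-in, not any vendor's engine: Python's standard library has no AES, so HMAC-SHA256 serves as the keyed pseudorandom function in counter mode, and the class name `InlineEncryptedMemory`, the per-address write counter, and the 16-byte keystream chunks are illustrative assumptions. Each "address" stands for a whole memory line. The counter is bumped on every write so that rewriting the same address never reuses a keystream; real engines typically avoid that problem either with a tweakable block-cipher mode such as AES-XTS or with on-chip per-line version counters. This model provides confidentiality only, not integrity.

```python
import hashlib
import hmac
import secrets

CHUNK = 16  # keystream bytes taken per PRF call (mirrors the AES block size)


class InlineEncryptedMemory:
    """Hypothetical model of an inline encryption engine in front of external memory.

    Writes are encrypted and reads decrypted transparently, so callers only
    ever handle plaintext while the backing store holds ciphertext.
    """

    def __init__(self, key: bytes) -> None:
        self._key = key
        self.external = {}   # address -> ciphertext: what an attacker could dump
        self._versions = {}  # address -> write counter, assumed to live on-chip

    def _keystream(self, address: int, version: int, length: int) -> bytes:
        # Stand-in PRF: HMAC-SHA256 truncated to 16 bytes. Real engines use AES.
        out = bytearray()
        block = 0
        while len(out) < length:
            counter = (address.to_bytes(8, "big") + version.to_bytes(4, "big")
                       + block.to_bytes(4, "big"))
            out += hmac.new(self._key, counter, hashlib.sha256).digest()[:CHUNK]
            block += 1
        return bytes(out[:length])

    def write(self, address: int, data: bytes) -> None:
        # Fresh version per write, so the same keystream is never used twice.
        version = self._versions.get(address, 0) + 1
        self._versions[address] = version
        pad = self._keystream(address, version, len(data))
        self.external[address] = bytes(d ^ p for d, p in zip(data, pad))

    def read(self, address: int) -> bytes:
        ciphertext = self.external[address]
        pad = self._keystream(address, self._versions[address], len(ciphertext))
        return bytes(c ^ p for c, p in zip(ciphertext, pad))


mem = InlineEncryptedMemory(secrets.token_bytes(32))
mem.write(0x8000_0000, b"firmware page")
```

Software calls `write` and `read` with plaintext, while `mem.external` only ever holds ciphertext, which is the property that makes the scheme transparent to the CPU.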

Encryption at rest helps ensure confidentiality and can also deter unauthorized modifications. While encryption alone doesn’t guarantee integrity, combining it with authentication (like a MAC or digital signature) allows devices to detect tampering. This way, encrypted data is both confidential and verified before use, protecting against attacks on stored data.
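Combining encryption with a MAC can be sketched with the encrypt-then-MAC pattern. This is a minimal sketch under stated assumptions: separate encryption and MAC keys, a random 12-byte nonce, and HMAC-SHA256 as a stand-in keystream generator because Python's standard library has no AES; in hardware this role would typically be filled by AES-GCM or AES plus a separate MAC engine. The function names `seal` and `open_sealed` are hypothetical. The important detail is the order of operations: the tag is verified with a constant-time comparison before anything is decrypted.

```python
import hashlib
import hmac
import secrets

NONCE_LEN = 12
TAG_LEN = 32  # HMAC-SHA256 output size


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Stand-in stream cipher (HMAC-SHA256 in counter mode); real designs use AES.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    """Encrypt, then authenticate nonce + ciphertext."""
    nonce = secrets.token_bytes(NONCE_LEN)
    ct = bytes(p ^ k for p, k in zip(plaintext, _keystream(enc_key, nonce, len(plaintext))))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return nonce + ct + tag


def open_sealed(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    """Verify the tag first; only decrypt data that passed the check."""
    if len(blob) < NONCE_LEN + TAG_LEN:
        raise ValueError("blob too short")
    nonce, ct, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("integrity check failed: data was modified")
    return bytes(c ^ k for c, k in zip(ct, _keystream(enc_key, nonce, len(ct))))
```

Flipping even one bit of a stored blob makes `open_sealed` raise instead of returning silently corrupted plaintext.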

From an SoC architecture perspective, implementing data-at-rest encryption involves selecting the right crypto IP and deciding where in the data path to integrate it. Some considerations include:

- External flash encryption: If your SoC boots from external flash, you may include an AES decryption block in the flash interface logic to decrypt code on the fly as it's fetched, preventing attackers from reverse-engineering firmware or extracting secrets.

- DRAM memory encryption: If sensitive data is stored in external DRAM, an inline memory encryption engine (IME) in the memory controller is recommended. Modern SoCs use IMEs to encrypt data going to DRAM and decrypt it on return.

- On-chip non-volatile storage: If the SoC has internal non-volatile storage for keys or configuration, it usually holds already-encrypted values or key material that is only meaningful internally. Often a root key is stored in eFuse and used to decrypt other secrets on the fly (a key ladder mechanism).
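The key ladder mentioned in the last item can be sketched in a few lines. In this illustrative model, `root` stands in for the eFuse value, each wrapped key would sit in flash, and each level's key decrypts the next. XOR with an HMAC-SHA256-derived pad replaces the AES decryption a real ladder would perform, and the helper names (`wrap`, `unwrap`, `run_ladder`) and per-level labels are hypothetical. In hardware, intermediate keys would stay inside the ladder and never become visible to software.

```python
import hashlib
import hmac
import secrets

KEY_LEN = 32  # bytes; matches the SHA-256 output used as the stand-in pad


def _pad(kek: bytes, label: bytes) -> bytes:
    # Stand-in for the AES operation a hardware key ladder would perform.
    return hmac.new(kek, label, hashlib.sha256).digest()


def wrap(kek: bytes, key: bytes, label: bytes) -> bytes:
    """Provisioning step: encrypt `key` under the key-encryption key `kek`."""
    if len(key) != KEY_LEN:
        raise ValueError("keys must be 32 bytes in this sketch")
    return bytes(k ^ p for k, p in zip(key, _pad(kek, label)))


def unwrap(kek: bytes, wrapped: bytes, label: bytes) -> bytes:
    """Device step: the XOR pad is symmetric, so unwrapping mirrors wrapping."""
    return wrap(kek, wrapped, label)


def run_ladder(root_key: bytes, chain: list[tuple[bytes, bytes]]) -> bytes:
    """Walk the ladder: each level's key decrypts the wrapped key below it."""
    key = root_key
    for label, wrapped in chain:
        key = unwrap(key, wrapped, label)
    return key


# Provisioning: root key (eFuse) -> firmware key -> content key
root = secrets.token_bytes(KEY_LEN)
fw_key = secrets.token_bytes(KEY_LEN)
content_key = secrets.token_bytes(KEY_LEN)
chain = [
    (b"level1", wrap(root, fw_key, b"level1")),
    (b"level2", wrap(fw_key, content_key, b"level2")),
]
```

Only the root key needs the strongest on-chip protection; everything else can be stored encrypted in ordinary flash and recovered at run time.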

Encrypting data at rest is essential for SoCs handling sensitive assets: it prevents physical attacks from exposing usable data, and when dedicated hardware support is planned in early, it can be implemented with minimal performance impact.

2.2 Data in Transit: Protection on the Move

Data in transit (or data in motion) encompasses any data actively moving through the system, whether over an on-chip bus, between the SoC and external devices, or over a network interface. In an SoC, this could mean communication between internal IP blocks or, more commonly, data traveling off-chip via interfaces like SPI, I²C, USB, PCI Express, Ethernet, or wireless radios. Whenever data leaves a secure boundary (leaving the chip or traveling over a shared internal bus), there’s a risk it could be intercepted, observed, or modified. Encryption in transit ensures that even if an attacker taps into a communication channel, they cannot decipher or tamper with the data.

At system level, many communications already use encryption protocols – for example, TLS for network traffic, IPsec for secure IP communications, or MACsec for secure Ethernet communication. However, from an SoC designer’s perspective, the goal is to build hardware support that can implement or accelerate these protocols and ensure data is not exposed on the wires. Here are a few key areas and strategies for data in transit protection in SoCs:

- Secure high-speed interfaces: Modern high-speed interfaces increasingly include native encryption and authentication to secure data in transit, for example MACsec for Ethernet and Integrity and Data Encryption (IDE) for PCI Express. Using IP cores with built-in encryption and key management for these links allows designers to achieve high throughput with minimal latency and area overhead while avoiding custom security implementations.

- General-purpose communication encryption: Not all data links have built-in security, so custom or slower interfaces (like SPI or UART) often require application-layer encryption, typically offloaded to SoC crypto accelerators. Hardware support (e.g., DMA-based AES engines or SSL/TLS offload) is essential to handle encryption efficiently without overloading the CPU.

- On-chip bus encryption/isolation: Inside an SoC, data moves over internal buses or NoC fabrics, which are usually considered safe but can be at risk if your threat model includes malicious IP or hardware trojans. Full on-chip encryption is rare due to complexity, but techniques like bus scrambling, secure bus segregation (e.g., ARM TrustZone filters), and dedicated secure paths help protect sensitive data. For example, a secure co-processor might use a private bus to secure memory, preventing compromised components from accessing secrets.

Securing data in transit means ensuring confidentiality and integrity for data moving on or off the chip. Designers should leverage standard security protocols in interface IP where possible, or include crypto accelerators to avoid CPU bottlenecks. This results in an SoC that protects against eavesdroppers and man-in-the-middle attacks at the hardware level.
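Integrity on a link also means rejecting frames an attacker captured and replays later. Protocols such as MACsec carry a per-frame packet number for this purpose, and the sketch below models that idea in a simplified form. It is a hypothetical endpoint, not the MACsec frame format: both sides are assumed to share an encryption key and a MAC key, HMAC-SHA256 stands in for AES-GCM because Python's standard library has no AES, and the receiver uses a strict "strictly increasing" rule rather than a replay window.

```python
import hashlib
import hmac
import secrets

PN_LEN = 8    # bytes of packet number in the frame header
TAG_LEN = 32  # HMAC-SHA256 output size


class LinkEndpoint:
    """Hypothetical endpoint of an encrypted, authenticated link.

    Each frame carries a strictly increasing packet number that doubles as
    the nonce, so the receiver can reject replayed or reordered frames.
    """

    def __init__(self, enc_key: bytes, mac_key: bytes) -> None:
        self._enc_key = enc_key
        self._mac_key = mac_key
        self._tx_pn = 0       # last packet number sent
        self._rx_highest = 0  # highest packet number accepted so far

    def _keystream(self, pn: int, length: int) -> bytes:
        # Stand-in keystream (HMAC-SHA256 in counter mode); hardware would use AES-GCM.
        out = bytearray()
        block = 0
        while len(out) < length:
            msg = pn.to_bytes(PN_LEN, "big") + block.to_bytes(4, "big")
            out += hmac.new(self._enc_key, msg, hashlib.sha256).digest()
            block += 1
        return bytes(out[:length])

    def send(self, payload: bytes) -> bytes:
        self._tx_pn += 1
        header = self._tx_pn.to_bytes(PN_LEN, "big")
        body = bytes(p ^ k for p, k in zip(payload, self._keystream(self._tx_pn, len(payload))))
        tag = hmac.new(self._mac_key, header + body, hashlib.sha256).digest()
        return header + body + tag

    def receive(self, frame: bytes) -> bytes:
        if len(frame) < PN_LEN + TAG_LEN:
            raise ValueError("frame too short")
        header, body, tag = frame[:PN_LEN], frame[PN_LEN:-TAG_LEN], frame[-TAG_LEN:]
        expected = hmac.new(self._mac_key, header + body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            raise ValueError("authentication failed")
        pn = int.from_bytes(header, "big")
        if pn <= self._rx_highest:
            raise ValueError("replayed or out-of-order frame")
        self._rx_highest = pn
        return bytes(c ^ k for c, k in zip(body, self._keystream(pn, len(body))))
```

A man-in-the-middle who modifies a frame fails the tag check, and one who resends an old, valid frame fails the packet-number check.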

2.3 Data in Use: Protecting Data During Processing

The third state, data in use, refers to data actively processed in on-chip RAM, and securing it is challenging because it must be in plaintext to function. Nonetheless, modern SoCs use isolation and runtime protection techniques to minimize exposure and prevent unauthorized access or tampering even if parts of the system are compromised.

- Trusted Execution Environments (TEEs): TEEs are a primary way to protect data in use. They combine hardware and software to create isolated secure zones for sensitive code and data. For example, ARM TrustZone splits the system into a secure world and a normal world, ensuring that even if the main OS is compromised, sensitive data processed in the TEE stays protected and inaccessible.

- On-the-fly memory encryption for runtime memory: Technologies such as Intel SGX (through its Memory Encryption Engine) and AMD SEV (through an AES engine in the memory controller) automatically encrypt RAM contents when they leave the processor, preventing attackers from reading sensitive data even if they dump the memory. While not every SoC encrypts all data, selectively encrypting memory for critical subsystems (like secure DSPs or neural engines) greatly reduces the risk of physical or cold-boot attacks.

- Data scrubbing and zeroization: It’s also important to protect data in use by clearing sensitive information from registers and memory immediately after it’s no longer needed. Hardware and software should actively wipe cryptographic keys and secret buffers to prevent attackers from retrieving leftover data.
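On the software side, the zeroization pattern amounts to keeping secrets in a mutable buffer and wiping it on every exit path. The sketch below shows that pattern with a hypothetical `sensitive_buffer` helper. It carries an important caveat: Python cannot guarantee that no other copies of the data exist (for example, `bytes` objects created along the way), so this only illustrates the structure. C firmware would typically use `explicit_bzero` or `memset_s` where available, and hardware key registers would be cleared by dedicated logic.

```python
from contextlib import contextmanager


@contextmanager
def sensitive_buffer(size: int):
    """Yield a mutable buffer for secret material and wipe it on exit.

    The wipe runs in `finally`, so it happens even if the caller raises.
    """
    buf = bytearray(size)
    try:
        yield buf
    finally:
        buf[:] = bytes(len(buf))  # overwrite the buffer's contents in place with zeros
```

Typical use is `with sensitive_buffer(32) as key: ...`, loading the key into `key` and using it only inside the block, so the wipe cannot be forgotten on an error path.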

In short, data in use must be in plaintext while it is processed, so SoCs protect it through isolation and runtime safeguards rather than encryption alone: TEEs confine sensitive code and data, on-the-fly memory encryption covers contents that leave the chip, and scrubbing and zeroization wipe secrets from memory and registers as soon as they are no longer needed.

3. SoC Design Trade-offs and Considerations for Encryption

Implementing strong encryption in an SoC requires balancing security with performance, area, power, and design complexity. Understanding these trade-offs early helps optimize security architecture for your application.

3.1 Performance and Latency

- Performance impact: Encryption can become a bottleneck if done purely in software.

- Hardware acceleration: Dedicated crypto cores (e.g., AES or SHA-3 engines) enable fast, efficient encryption and hashing with minimal CPU load and latency.

- Pipelining & parallelism: Encryption hardware can process multiple bytes per cycle or use parallel cores to sustain high data rates (e.g., 10 Gbps) without bottlenecks.

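- Latency-critical designs: Modes like AES-CTR minimize latency because the keystream depends only on the key and counter, so it can be computed before the data arrives, leaving a single XOR on the data path. Combined with cut-through encryption (processing data as it streams in rather than buffering whole frames), this is crucial for real-time systems; avoid introducing delays into critical control loops.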

- Boot time considerations: Decrypting large firmware at boot can slow startup, but using fast crypto accelerators and on-the-fly decryption mitigates this overhead.
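The pipelining point can be checked with simple arithmetic: an AES core moves one 128-bit block per completed operation, so the clock it needs is the target bit rate divided by 128, times the cycles spent per block, divided by the number of parallel engines. The helper below is a hypothetical back-of-the-envelope calculator; the figure of 11 cycles per block for an iterative AES-128 core (one per round plus the initial key addition) is an assumption, and real cores vary.

```python
def required_clock_mhz(target_gbps: float, cycles_per_block: int,
                       block_bits: int = 128, engines: int = 1) -> float:
    """Clock frequency (MHz) that `engines` parallel AES cores need to sustain
    `target_gbps`, given how many cycles each core spends per block."""
    blocks_per_second = target_gbps * 1e9 / block_bits
    return blocks_per_second * cycles_per_block / engines / 1e6


for label, cycles, engines in [
    ("fully pipelined, 1 block/cycle", 1, 1),
    ("iterative, 11 cycles/block", 11, 1),
    ("4 iterative engines in parallel", 11, 4),
]:
    print(f"{label}: {required_clock_mhz(10, cycles, engines=engines):.2f} MHz")
```

For a 10 Gbps target, this works out to roughly 78 MHz for a fully pipelined core, about 859 MHz for a single iterative core, and about 215 MHz when four iterative cores share the load, which is why high-rate designs pipeline or parallelize.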

3.2 Area and Power Costs

- Area overhead: A compact AES core uses only tens of thousands of gates — a tiny fraction of a modern SoC — and even multiple crypto engines typically consume just a few percent of total area, which is acceptable for most designs. In area-limited, low-cost chips, designers can use lightweight ciphers, shared or time-multiplexed engines, and small secure memory blocks (just a few KB) to minimize area impact.

- Power consumption: Cryptographic operations do add dynamic power, but dedicated hardware is far more energy-efficient per bit than using software. By optimizing cores for quick execution and using techniques like clock gating or DMA, designers can minimize active power and even reduce overall system energy in some workloads.

- Memory and key storage: Secure key storage adds some area and power overhead, but these costs are generally minor. It’s wise to budget a few percent extra power for security functions and ensure the power delivery can handle peak encryption loads.

- Design complexity cost: Adding encryption increases design verification and timing complexity, introducing extra engineering effort and extending the timeline. However, using proven IP cores helps reduce these risks and simplifies validation.

The area and power overheads of encryption are usually modest and justified by the significant security benefits. For most mid-to-large SoCs, a small area and power increase is an acceptable price for preventing data breaches and IP theft. High-performance designs often include full crypto suites (AES, SHA-3, RSA/ECC), while ultra-low-power systems may adopt minimal or shared engines and carefully control when they run. Plan for encryption early in your floorplan and power budget. With modern IP optimizations, encryption hardware is compact and efficient, so security is no longer the elephant in the room but an essential and achievable design element.

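| Aspect | Design Considerations & Strategies |
|---|---|
| Performance & Latency | Offload encryption to dedicated crypto accelerators; pipeline or parallelize engines to sustain high data rates; use low-latency modes such as AES-CTR on latency-critical paths; use fast accelerators and on-the-fly decryption to limit boot-time overhead. |
| Area & Power Costs | Expect crypto engines to take a few percent of total area; use lightweight ciphers or shared, time-multiplexed engines in constrained designs; apply clock gating and DMA to limit active power; size power delivery for peak encryption loads. |
| Design Complexity | Use proven IP cores; plan for extra verification and timing-closure effort early in the schedule. |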

Table 1 - SoC Design Considerations for Encryption

4. Common Pitfalls in Implementing SoC Data Encryption

Designing encryption into an ASIC or SoC is complex, and common mistakes can seriously undermine security.

- Using Weak or Outdated Algorithms/Incorrect Configurations: One pitfall is selecting an algorithm that is known to be broken, or using too short a key length to save resources. Similarly, using a strong algorithm in an insecure mode (for example, AES in ECB mode, or reusing a nonce in CTR or GCM mode) or with a poor implementation can undermine security.

- Poor Key Protection (Keys in the Clear): Keys should never be left in clear text in memory or firmware. Instead, store keys only in dedicated secure memory, and clear them after use. Prevent side-channel leaks with resistant designs and disable debug access to keys.

- Storing Keys and Data Together: Keep keys in a secure subsystem separate from the data they protect, so that a breach of one does not compromise both.

- Improper or No Integrity Checking: Skipping integrity checks is a serious risk. Encryption alone doesn’t guarantee data hasn’t been tampered with. Always include authentication (e.g., AES-GCM) or separate MACs to ensure data integrity.

- Leaving Backdoors or Debug Modes: Leaving debug backdoors or test modes in production is another critical mistake. Remove them or secure them with strong challenge-response authentication, and avoid using universal debug keys shared across devices.

- Not Planning for Key Lifecycle (Rotation/Revocation): Not planning for key rotation or revocation leaves systems vulnerable if keys are compromised. Design in mechanisms to update keys and revoke certificates from the start, rather than trying to retrofit later.

- Ignoring Physical Security Aspects: Physical security is often ignored. Physical attacks (e.g., glitching, probing) can expose keys or bypass checks. Depending on your threat model, consider tamper sensors, encrypted on-chip buses, or protective mesh shields.
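The "strong algorithm, insecure configuration" pitfall is easy to demonstrate with counter-mode encryption: if the same key and nonce are used for two messages, XORing the two ciphertexts cancels the keystream and yields the XOR of the plaintexts, with no key needed. The sketch below shows this with a stand-in keystream (HMAC-SHA256 in counter mode, since Python's standard library has no AES); the effect is identical for AES-CTR and for the encryption part of AES-GCM. The function name and messages are illustrative.

```python
import hashlib
import hmac
import secrets


def ctr_encrypt(key: bytes, nonce: bytes, plaintext: bytes) -> bytes:
    # Stand-in counter-mode stream cipher; AES-CTR behaves the same way here.
    stream = bytearray()
    counter = 0
    while len(stream) < len(plaintext):
        stream += hmac.new(key, nonce + counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(p ^ k for p, k in zip(plaintext, stream))


key = secrets.token_bytes(32)
nonce = b"\x00" * 12  # the mistake being demonstrated: one nonce for two messages
p1 = b"PIN=1234;user=alice"
p2 = b"PIN=9999;user=bob!!"
c1 = ctr_encrypt(key, nonce, p1)
c2 = ctr_encrypt(key, nonce, p2)

# The attacker only sees c1 and c2, yet the keystream cancels out:
leak = bytes(a ^ b for a, b in zip(c1, c2))
# Anyone who knows or guesses p1 now recovers p2 outright.
recovered = bytes(a ^ b for a, b in zip(leak, p1))
```

The fix is not a stronger cipher but correct configuration: derive a unique nonce per message (for example from a monotonic counter) for every key.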

In short, avoiding these pitfalls means following best practices: use strong algorithms and well-vetted libraries, isolate keys, and design against both software and hardware attacks, with no shortcuts. Regular security reviews and testing are crucial, and with a thorough approach you can build a secure, robust SoC and steer clear of the most common crypto mistakes.

5. Conclusion

Securing data through robust encryption is not optional in modern SoC design; it is a fundamental requirement for protecting users, intellectual property, and brand reputation. It requires integrating protection at every level of the system, and data encryption plays a central role. As we’ve explored in this article, data in an SoC moves through three states: at rest, in transit, and in use. Each state demands specific architectural strategies, from transparent memory encryption to secure communication protocols and runtime isolation mechanisms like TEEs.

At the same time, engineers must navigate real-world trade-offs, balancing performance and latency against area, power, and design complexity. With careful planning, dedicated crypto accelerators, proven IP cores, and efficient design practices, strong encryption can be integrated without compromising system performance or user experience. Finally, avoiding common pitfalls, such as weak algorithms, poor key management, or forgotten debug backdoors, is essential to ensure that encryption truly strengthens security rather than providing a false sense of protection. When designed thoughtfully, encryption is not just a technical checkbox but a cornerstone of trust: it protects your users, safeguards valuable IP, and reinforces your brand's reputation for security. By embedding data protection deeply into your SoC architecture from the start, you build a foundation for secure, future-proof devices that can withstand evolving threats.