Introduction

Software-Defined Vehicles (SDVs) are turning cars into distributed real-time computing platforms. Instead of dozens of standalone ECUs connected by a tangle of legacy field buses, we now see zonal controllers aggregating local I/O, centralized compute platforms running safety- and mission-critical software, and a service-oriented middleware layer stretching across the vehicle. All of this sits on top of a network that must behave much more like a deterministic datacenter fabric than a collection of CAN segments.

Higher bandwidth alone does not solve this problem. As you start running closed-loop control, sensor fusion, perception pipelines, diagnostics, and over-the-air updates on the same physical fabric, the critical question becomes: what happens to latency and jitter under load? That is where Time-Sensitive Networking (TSN) comes in. TSN turns Ethernet from a best-effort medium into a scheduled, globally synchronized fabric, and the only way to make that work in a vehicle at scale is to implement the right TSN infrastructure directly in silicon.

TSN as the deterministic layer of Software-Defined Vehicles

Standard Ethernet is non-deterministic by design. Frames contend for bandwidth, queues build unpredictably, and there is nothing to stop a safety-critical message from getting stuck behind a burst of best-effort traffic. In an automotive control loop, that is not acceptable.

TSN augments Ethernet with three pillars: a global sense of time, time- and class-aware forwarding, and redundancy. The global time base comes from IEEE 802.1AS, the generalized Precision Time Protocol (gPTP), and its revisions. 802.1AS distributes a synchronized clock across all endpoints. Hardware timestamping and a precision hardware clock in every switch and end-station keep that time aligned to within about a microsecond across the vehicle.
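The core of 802.1AS is simple arithmetic on hardware timestamps. The Python below is a minimal sketch, not a gPTP implementation. It makes three simplifications: the class and function names are hypothetical, the neighbor rate ratio defaults to 1.0 (both oscillators at the same frequency), and the correctionField accumulated upstream is collapsed into a single argument.

```python
from dataclasses import dataclass


@dataclass
class PdelayTimestamps:
    """Timestamps (ns) from one Pdelay_Req / Pdelay_Resp exchange on a link."""
    t1: int  # Pdelay_Req leaves the requester (requester clock)
    t2: int  # Pdelay_Req reaches the responder (responder clock)
    t3: int  # Pdelay_Resp leaves the responder (responder clock)
    t4: int  # Pdelay_Resp reaches the requester (requester clock)


def mean_link_delay_ns(ts: PdelayTimestamps, neighbor_rate_ratio: float = 1.0) -> float:
    # Round trip seen by the requester minus the responder's turnaround, halved.
    # Any constant offset between the two clocks cancels out of this difference.
    round_trip = (ts.t4 - ts.t1) * neighbor_rate_ratio
    turnaround = ts.t3 - ts.t2
    return (round_trip - turnaround) / 2.0


def synchronized_time_ns(origin_ts_ns: int, correction_ns: float, link_delay_ns: float) -> float:
    # Grandmaster time at the instant a Sync message arrives: origin timestamp,
    # plus delays accumulated upstream, plus this link's measured delay.
    return origin_ts_ns + correction_ns + link_delay_ns


# 500 ns cable delay, 10 us responder turnaround, responder clock 250 ns ahead.
ts = PdelayTimestamps(t1=1_000, t2=1_750, t3=11_750, t4=12_000)
print(mean_link_delay_ns(ts))  # 500.0
```

The 250 ns clock offset appears in t2 and t3 but drops out of the delay. This is why the link delay can be measured before the clocks agree.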

On top of that, the forwarding plane becomes time-aware. 802.1Qbv defines the Time-Aware Shaper. It uses a gate control list (GCL) on each egress port to open and close the gate of each traffic-class queue, so that each traffic class is allowed onto the link only during specific time windows. The Time-Aware Shaper is combined with several other mechanisms: 802.1Qav credit-based shaping, 802.1Qci per-stream filtering and policing, 802.1Qch cyclic queuing and forwarding, and 802.1Qbu frame pre-emption. Together, these let the switch give hard guarantees to some streams while still carrying audio/video and best-effort traffic on the same physical link. Finally, 802.1CB frame replication and elimination introduces controlled redundancy, so that a single link or path failure does not compromise a safety-critical function.
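To make the Time-Aware Shaper concrete, the sketch below models one port's gate control list as a cyclic sequence of (gate-state bitmask, interval) entries and answers one question: may traffic class N transmit at time t? It is a simplified model under stated assumptions. The names are hypothetical, the cycle time equals the sum of the entry intervals, and guard bands are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GclEntry:
    gate_states: int  # bit i set => the queue for traffic class i may transmit
    interval_ns: int  # how long this entry stays in force


def gate_states_at(gcl: list[GclEntry], base_time_ns: int, now_ns: int) -> int:
    """Gate-state bitmask in force at now_ns for a GCL that repeats every cycle.

    Assumes the cycle time equals the sum of the entry intervals.
    """
    if now_ns < base_time_ns:
        raise ValueError("schedule has not started yet")
    cycle_ns = sum(entry.interval_ns for entry in gcl)
    offset = (now_ns - base_time_ns) % cycle_ns  # position within the current cycle
    for entry in gcl:
        if offset < entry.interval_ns:
            return entry.gate_states
        offset -= entry.interval_ns
    raise AssertionError("unreachable: offset always falls inside the cycle")


def may_transmit(gcl: list[GclEntry], base_time_ns: int, now_ns: int, traffic_class: int) -> bool:
    return bool((gate_states_at(gcl, base_time_ns, now_ns) >> traffic_class) & 1)


# Hypothetical 1 ms cycle: 200 us reserved for class 7, then 800 us for classes 0-6.
schedule = [GclEntry(0b1000_0000, 200_000), GclEntry(0b0111_1111, 800_000)]
print(may_transmit(schedule, 0, 150_000, 7))  # True
print(may_transmit(schedule, 0, 150_000, 0))  # False
```

Real schedules usually also need a guard band before a protected window. The guard band stops a best-effort frame already in flight from overrunning the window, and frame pre-emption is one way to shrink it.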

Viewed together, TSN turns the in-vehicle Ethernet network into a deterministic fabric: bounded latency, controlled jitter, and predictable behaviour even under worst-case load. For SDVs this is not a “nice to have”; it is the prerequisite for overlaying software-defined services on top of the network without compromising safety.

TSN Infrastructure Across the Software-Defined Vehicle Hierarchy

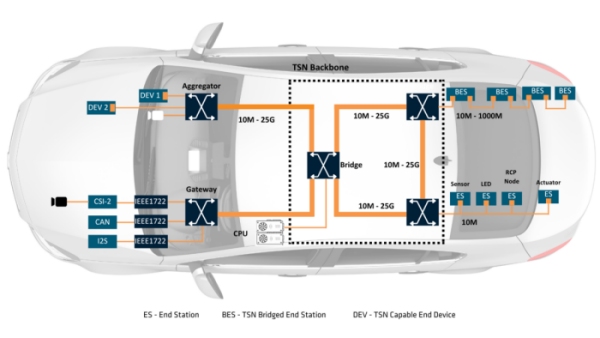

Real SDV architectures are not just a flat TSN domain. They are built as a hierarchy of TSN-aware components, each with different constraints and responsibilities: leaf end-stations at the edge, zonal controllers and gateways in the middle, and backbone switches and central compute at the top.

Figure: Key components of the centralized compute based zonal architecture

At the edge, leaf end-stations are often sensors and actuators that do not justify a full-blown Gigabit interface. Single-pair 10 Mbit/s links like 10BASE-T1S are attractive here for cost, wiring, and EMC reasons. Even so, these nodes cannot be “dumb”. They must participate in the 802.1AS time domain, provide basic TSN queuing and scheduling on their transmit path, and offer at least minimal timestamping and diagnostics. The challenge is to do this in a few tens of thousands of gates, with tight power and cost constraints.

In the middle of the hierarchy sit the zonal controllers and domain gateways. These chips are the convergence point for heterogeneous traffic: CAN FD and LIN segments, local 10BASE-T1S links, and one or more 100 Mbit/s or 1 Gbit/s uplinks towards the backbone. Functionally, they must be both a TSN switch and a protocol gateway.

On the switch side, they need:
- multiple ports;
- an 802.1Qbv gate control list on each egress port;
- policing and shaping for each class or stream;
- pre-emption support;
- a data path designed to keep internal latency and jitter under control.

On the gateway side, they map CAN or LIN frames into Ethernet streams, often using IEEE 1722 or related formats. They assign stream identifiers and priorities, then inject those streams into the TSN schedule in a way that respects both the bus timing and the Ethernet cycle times. They also usually act as time-aware relays in the 802.1AS domain, which in IEEE 1588 terms is comparable to a boundary or transparent clock. Finally, they need to expose per-port timing and statistics for validation and debugging.
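At its core, a gateway's CAN-to-Ethernet classification is a table lookup. The sketch below assumes a hypothetical static table keyed by CAN identifier. It builds the 64-bit stream ID using the common 802.1Q/AVB convention: the talker's 48-bit MAC address followed by a 16-bit unique ID. The table contents, MAC address, and PCP values are illustrative only, and the IEEE 1722 encapsulation itself is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamMapping:
    unique_id: int  # 16-bit index distinguishing this talker's streams
    pcp: int        # 802.1Q priority code point (0-7) used on the Ethernet side


def make_stream_id(talker_mac: bytes, unique_id: int) -> int:
    """64-bit stream ID: 48-bit talker MAC address followed by a 16-bit unique ID."""
    if len(talker_mac) != 6 or not 0 <= unique_id <= 0xFFFF:
        raise ValueError("expected a 6-byte MAC address and a 16-bit unique ID")
    return (int.from_bytes(talker_mac, "big") << 16) | unique_id


# Hypothetical static table, e.g. generated from the vehicle network description.
CAN_TO_STREAM = {
    0x0C0: StreamMapping(unique_id=1, pcp=6),  # a time-critical chassis message
    0x3E8: StreamMapping(unique_id=2, pcp=2),  # a comfort/body message
}


def classify_can_frame(can_id: int, talker_mac: bytes) -> tuple[int, int]:
    """Return (stream_id, pcp) for a CAN identifier; unmapped IDs are rejected."""
    mapping = CAN_TO_STREAM.get(can_id)
    if mapping is None:
        raise KeyError(f"CAN ID {can_id:#x} has no stream mapping")
    return make_stream_id(talker_mac, mapping.unique_id), mapping.pcp


gateway_mac = bytes.fromhex("020000000001")  # locally administered example address
stream_id, pcp = classify_can_frame(0x0C0, gateway_mac)
print(f"{stream_id:016x} pcp={pcp}")  # 0200000000010001 pcp=6
```

Rejecting unmapped identifiers, rather than forwarding them with a default priority, keeps unexpected bus traffic from entering the TSN schedule unaccounted for.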

At the top, backbone switches and central compute SoCs tie the whole vehicle together. Here the emphasis shifts from extreme cost sensitivity to port count, aggregate throughput, and interaction with software. Backbone devices may expose a dozen or more ports at 1 Gbit/s or above, serve multiple logical networks through VLANs and TSN classes, interoperate with security blocks such as MACsec, and interact with hypervisors or container orchestrators. The silicon here typically implements a high-port-count TSN switch fabric and one or more traffic-aware DMA engines so frames are moved between memory and ports in time to meet the schedule, without making timing dependent on general-purpose CPU response.

Taken as a whole, TSN infrastructure in an SDV is a layered system: lightweight TSN-capable MACs at the edge, integrated switch + gateway logic in zonal controllers, and larger TSN fabrics at the backbone with tight coupling to compute and security.

Design Considerations for TSN-Based Software-Defined Vehicle Silicon

Once you decide to build a TSN-based architecture, the hard work starts in the details. Architects who want real determinism, not just a TSN logo on a slide, have to reason carefully about timing, queuing, integration of legacy buses, and the split between hardware and software.

The starting point is an explicit end-to-end timing model. For each class of traffic, you need a latency budget, including worst-case queuing, serialization, and propagation across all hops. That budget then informs the design of your TSN schedules: cycle times, gate open/close windows, and ordering of traffic classes across ports. The more hops in the path, the more careful you must be to avoid accumulating jitter through poorly aligned schedules.
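A per-class budget can start as a simple worst-case sum. The sketch below assumes store-and-forward switches and adds four terms: per-link serialization, cable propagation, a fixed per-switch forwarding latency, and a worst-case queuing allowance per switch. All parameter values are assumptions for illustration, including the 5 ns/m propagation figure. The 20-byte per-frame overhead is the Ethernet preamble, start-of-frame delimiter, and minimum inter-packet gap.

```python
WIRE_OVERHEAD_BYTES = 20  # 7 B preamble + 1 B start-of-frame delimiter + 12 B minimum inter-packet gap


def serialization_ns(frame_bytes: int, link_bps: float) -> float:
    bits = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return bits * 1e9 / link_bps


def worst_case_latency_ns(frame_bytes: int, link_rates_bps: list[float],
                          switch_latency_ns: float, queuing_ns_per_switch: float,
                          cable_m_per_link: float, prop_ns_per_m: float = 5.0) -> float:
    """Store-and-forward latency bound over a path of len(link_rates_bps) links.

    Every link adds serialization and propagation; every switch between two
    links adds a fixed forwarding latency plus a worst-case queuing allowance.
    """
    links = len(link_rates_bps)
    total = sum(serialization_ns(frame_bytes, rate) for rate in link_rates_bps)
    total += links * cable_m_per_link * prop_ns_per_m
    total += (links - 1) * (switch_latency_ns + queuing_ns_per_switch)
    return total


# Hypothetical path: sensor --100M--> zonal switch --1G--> backbone switch --1G--> compute.
budget = worst_case_latency_ns(
    frame_bytes=128,
    link_rates_bps=[100e6, 1e9, 1e9],
    switch_latency_ns=2_000,
    queuing_ns_per_switch=10_000,
    cable_m_per_link=5,
)
print(budget)  # 38283.0
```

In a real analysis the queuing term is the hard part. It follows from the gate schedules and the competing traffic at each hop rather than being a fixed constant.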

Queue and buffer architecture is another critical dimension. Per-port and per-class queuing is a minimum; in some systems, per-stream queues are required to separate safety-critical flows from each other. The switch data path must be designed to support cut-through or store-and-forward behaviour where appropriate, manage head-of-line blocking, and bound the impact of one misbehaving stream on others. Integrating frame pre-emption adds further complexity, as express and preemptable traffic share the same physical link.
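Per-stream policing is how one misbehaving stream is kept from affecting others. 802.1Qci flow meters follow a bandwidth-profile algorithm with committed and excess rates and burst sizes. The sketch below deliberately reduces that to a single-rate token bucket that drops non-conforming frames. The class name, parameters, and profile values are hypothetical.

```python
class TokenBucketPolicer:
    """Single-rate token bucket: frames beyond the stream's profile are dropped."""

    def __init__(self, rate_bps: float, burst_bytes: int, start_ns: int = 0):
        self.bytes_per_ns = rate_bps / 8 / 1e9
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # start with a full bucket
        self.last_ns = start_ns

    def admit(self, now_ns: int, frame_bytes: int) -> bool:
        # Refill for the elapsed time, never beyond the configured burst size.
        elapsed_ns = now_ns - self.last_ns
        self.tokens = min(float(self.burst_bytes), self.tokens + elapsed_ns * self.bytes_per_ns)
        self.last_ns = now_ns
        if frame_bytes <= self.tokens:
            self.tokens -= frame_bytes
            return True  # conforming: forward to the egress queue
        return False  # non-conforming: drop, so this stream cannot starve others


# Hypothetical profile: 8 Mbit/s (1 byte per microsecond) with a 1500-byte burst.
policer = TokenBucketPolicer(rate_bps=8e6, burst_bytes=1500)
print([policer.admit(0, 1000), policer.admit(0, 1000), policer.admit(600_000, 1000)])
# [True, False, True]
```

The burst size bounds how much a stream can inject back-to-back, and the rate bounds its long-term share. Together they give the worst-case interference figure that the other streams' budgets depend on.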

Legacy integration deserves its own design pass. CAN and LIN have their own schedules, priorities, and error behaviours. When a gateway batches up multiple bus frames and injects them into the TSN network, you can see bursts that interact in non-trivial ways with Ethernet schedules. You need to understand how bus timing and TSN cycle timing interact, what happens in overload or error conditions, and how to guarantee that gateway buffering doesn’t destroy determinism.
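One way to keep gateway buffering from eroding determinism is to bound two things: the size of a batch and how long any frame may sit in it. The sketch below is a hypothetical software model. The names, the limits, and the 16-byte per-frame encapsulation overhead are all assumptions, not IEEE 1722 field sizes. A batch is released in two cases: when adding a frame would exceed the byte budget, or when its oldest frame has waited max_hold_ns. The gateway's own added delay is then bounded by max_hold_ns plus the polling period, before any wait for the next TSN transmit window (which is not modeled).

```python
from typing import List, Optional


class CanBatcher:
    """Batches CAN frames for one Ethernet stream with bounded size and hold time."""

    def __init__(self, max_batch_bytes: int, max_hold_ns: int, per_frame_overhead: int = 16):
        self.max_batch_bytes = max_batch_bytes
        self.max_hold_ns = max_hold_ns
        self.per_frame_overhead = per_frame_overhead  # hypothetical encapsulation cost
        self._batch: List[bytes] = []
        self._batch_bytes = 0
        self._oldest_ns: Optional[int] = None

    def push(self, now_ns: int, can_payload: bytes) -> List[List[bytes]]:
        """Add a frame; return any batches that must be sent now."""
        ready = []
        size = len(can_payload) + self.per_frame_overhead
        if self._batch and self._batch_bytes + size > self.max_batch_bytes:
            ready.append(self._flush())  # size limit: never exceed one frame's budget
        if not self._batch:
            self._oldest_ns = now_ns
        self._batch.append(can_payload)
        self._batch_bytes += size
        return ready + self.poll(now_ns)

    def poll(self, now_ns: int) -> List[List[bytes]]:
        """Call from a periodic timer: flush once the oldest frame hits its hold limit."""
        if self._batch and now_ns - self._oldest_ns >= self.max_hold_ns:
            return [self._flush()]
        return []

    def _flush(self) -> List[bytes]:
        batch = self._batch
        self._batch, self._batch_bytes, self._oldest_ns = [], 0, None
        return batch


batcher = CanBatcher(max_batch_bytes=64, max_hold_ns=1_000)
print(batcher.push(0, bytes(8)), batcher.push(100, bytes(8)))  # [] []
print(len(batcher.poll(1_000)[0]))  # 2 (released by the hold-time limit)
```

A smaller max_hold_ns lowers the latency bound but produces more, smaller Ethernet frames. Where that trade-off lands depends on the cycle time of the TSN window the stream is scheduled into.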

Then there is the hardware–software split. Some OEMs prefer static TSN schedules and treat network configuration as part of the vehicle’s “wiring harness description”. Others want dynamic control via a central TSN controller or SDN-like orchestration. In both cases, the control plane must interact with the switch and gateway IP through a clear programming model. That means registers, tables, and event mechanisms that can update gate control lists (GCLs), stream filters, and policing parameters without destabilizing the fabric. The DMA or SDMA engines that move data between system memory and switch ports must be driven in a time-aware way, with careful attention to interrupt latency, cache effects, and multi-core interactions.
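One detail that keeps GCL updates from destabilizing the fabric is that 802.1Qbv separates an administrative (pending) schedule from the operational one. The switchover happens at a cycle-aligned time rather than the moment software writes the registers. The function below is a simplified sketch of how that start time is chosen when the requested base time has already passed. It ignores cycle-time extension and the rest of the configuration state machine, and its name is hypothetical.

```python
def config_change_time_ns(admin_base_time_ns: int, cycle_time_ns: int, now_ns: int) -> int:
    """When a newly written (admin) gate schedule would take effect.

    If the requested base time is still in the future it is used as-is;
    otherwise the start is pushed forward by whole cycles so that the new
    schedule stays aligned to base_time + N * cycle_time.
    """
    if cycle_time_ns <= 0:
        raise ValueError("cycle time must be positive")
    if admin_base_time_ns >= now_ns:
        return admin_base_time_ns
    elapsed = now_ns - admin_base_time_ns
    n = -(-elapsed // cycle_time_ns)  # ceiling division: smallest N with base + N*cycle >= now
    return admin_base_time_ns + n * cycle_time_ns


# Software writes a 1 ms schedule with base time 0, but the write lands at 2.5 ms.
print(config_change_time_ns(0, 1_000_000, 2_500_000))  # 3000000
```

Because every node derives the same cycle-aligned start time from the shared 802.1AS clock, a control plane can push new schedules to many switches and have them switch over together.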

Finally, there is scalability. The same architectural ideas should scale from a three-port switch in a zonal controller to a sixteen-port backbone device, and from 100 Mbit/s to multi-Gigabit single-pair links over time. TSN IP that cannot scale in this way will either limit future designs or force expensive re-architectures.

Comcores’ TSN IP core offerings for Software-Defined Vehicle Infrastructure

Comcores focuses precisely on these building blocks: deterministic Ethernet IP cores that SoC and ASIC teams can integrate into their designs to get TSN right from the first silicon spin.

For zonal and backbone roles, Comcores provides a 1G TSN Ethernet Switch IP that implements the necessary 802.1Qbv/Qci/Qbu features on a multi-port switch core. The data path supports cut-through forwarding for low latency, and the associated SDMA engine handles descriptor scheduling and buffer management in a way that respects TSN timing, decoupling critical paths from general software jitter. The same architecture can be instantiated in compact form for three-to-five-port zonal controllers or scaled up for higher-port devices.
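The latency benefit of cut-through forwarding comes from how much of a frame a switch must receive before it can start transmitting. The sketch below compares the two modes for a single hop under assumed numbers. It is a generic model, not a description of the Comcores switch's internal timing. The fabric delay and the header decision point are hypothetical.

```python
PREAMBLE_SFD_BYTES = 8


def bytes_to_ns(nbytes: int, link_bps: float) -> float:
    return nbytes * 8 * 1e9 / link_bps


def hop_latency_ns(frame_bytes: int, link_bps: float, fabric_ns: float,
                   cut_through: bool, decision_bytes: int = 18) -> float:
    """First bit in at ingress to first bit out at egress, for an idle egress port.

    Store-and-forward must receive the whole frame before forwarding; cut-through
    only needs the header up to `decision_bytes` (hypothetical: destination and
    source MAC, 802.1Q tag, EtherType). Assumes ingress and egress run at the
    same rate, which is when cut-through is normally possible.
    """
    wait = decision_bytes if cut_through else frame_bytes
    return bytes_to_ns(PREAMBLE_SFD_BYTES + wait, link_bps) + fabric_ns


# 1518-byte frame on 1 Gbit/s links with an assumed 500 ns internal fabric delay.
for mode in (False, True):
    print("cut-through" if mode else "store-and-forward", hop_latency_ns(1518, 1e9, 500, mode))
# store-and-forward 12708.0
# cut-through 708.0
```

The saving grows with frame size and shrinks with link speed. It also applies only when the egress port is free, since a frame that has to queue behind other traffic waits regardless of forwarding mode.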

At the edge, Comcores offers a 10 M / 10BASE-T1S end-station IP designed for leaf nodes. It provides the MAC-level functionality needed for TSN participation (basic queuing, timestamping, and PTP support) within the area and power budgets of small local microcontrollers or sensor ASICs. This lets designers move from proprietary point-to-point links to standards-based Ethernet even at the lowest tier of their architecture.

Between legacy buses and Ethernet, Comcores’ IEEE 1722 Gateway IP handles the protocol translation required to map CAN FD, LIN, and other sources into synchronized Ethernet streams. It deals with stream identification, timing, buffering, and class mapping, and is designed to integrate tightly with the TSN switch core, minimizing latency and jitter at the gateway boundary.

Underneath these blocks, the Comcores SDMA Engine IP provides the time-aware data movement that ties everything together. It is responsible for ensuring that descriptors and payloads are in the right place at the right time, that deadlines are met even when CPUs are busy, and that statistics are available for verification and monitoring. In practice, this means deterministic, hardware-driven data paths that match the TSN schedules rather than hoping the operating system keeps up.
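A time-aware DMA engine can be pictured as a transmit queue whose descriptors carry launch times. The model below is a hypothetical sketch. The descriptor fields and class names are illustrative, not the Comcores SDMA programming interface. Frames leave in launch-time order and never before their launch time. Descriptors that software hands over after their launch time are counted, which is the kind of statistic useful for verification and monitoring.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class TxDescriptor:
    launch_time_ns: int
    seq: int                              # tie-breaker: FIFO among equal launch times
    payload: bytes = field(compare=False)


class LaunchTimeQueue:
    """Releases frames to a port no earlier than each descriptor's launch time."""

    def __init__(self) -> None:
        self._heap: List[TxDescriptor] = []
        self._seq = 0
        self.late_submissions = 0  # descriptors handed over after their launch time

    def enqueue(self, now_ns: int, launch_time_ns: int, payload: bytes) -> None:
        if launch_time_ns < now_ns:
            # Software missed the slot; in this model the frame is still sent at
            # the next release, but the miss is counted for monitoring.
            self.late_submissions += 1
        heapq.heappush(self._heap, TxDescriptor(launch_time_ns, self._seq, payload))
        self._seq += 1

    def release(self, now_ns: int) -> List[bytes]:
        """Pop every frame whose launch time has been reached, earliest first."""
        ready = []
        while self._heap and self._heap[0].launch_time_ns <= now_ns:
            ready.append(heapq.heappop(self._heap).payload)
        return ready


q = LaunchTimeQueue()
q.enqueue(0, 300, b"b")
q.enqueue(0, 100, b"a")
print(q.release(50), q.release(100), q.release(400))  # [] [b'a'] [b'b']
```

Software only has to deliver descriptors ahead of their launch times. The exact transmit instant is then set by the hardware clock rather than by when the CPU gets around to it.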

Taken together, these IP cores allow semiconductor companies and Tier-1s to construct end-stations, zonal controllers, central gateways, and backbone switches that behave as a coherent TSN infrastructure for SDVs, instead of a mosaic of loosely connected building blocks.

Key Takeaways & Conclusion

Software-Defined Vehicles push the in-vehicle network to behave like a real-time services fabric; raw bandwidth is not enough, and deterministic behaviour must be guaranteed in silicon. Time-Sensitive Networking provides this determinism by adding a global time base (802.1AS), time- and class-aware forwarding (802.1Qbv/Qav/Qci/Qbu), and redundancy (802.1CB), turning standard Ethernet into a scheduled, predictable backbone suitable for safety-critical automotive workloads.

In practice, SDV networking is a hierarchical TSN infrastructure with three tiers:
- at the edge, lightweight TSN-capable 10BASE-T1S end-stations;
- in the middle, zonal controllers that combine multi-port TSN switching with gateway functions for CAN/LIN and IEEE 1722 stream mapping;
- at the backbone, high-port-count TSN switch fabrics tightly coupled to central compute and security.

Achieving real determinism across this hierarchy demands:
- explicit end-to-end timing models;
- carefully designed queue and buffer architectures;
- thoughtful integration of legacy buses;
- a clean hardware–software split for TSN control;
- IP that scales from small zonal devices to multi-Gigabit backbones.

Comcores’ TSN portfolio comprises the 1G TSN Ethernet Switch IP, 10 M / 10BASE-T1S end-station IP, IEEE 1722 Gateway IP, and SDMA Engine IP. It is designed to implement exactly this infrastructure. With it, SoC and ASIC teams can build SDV-ready end-stations, zonal controllers, gateways, and backbone switches where determinism is a property of the hardware rather than a best-effort outcome of the software stack.